Experiment Runs overview

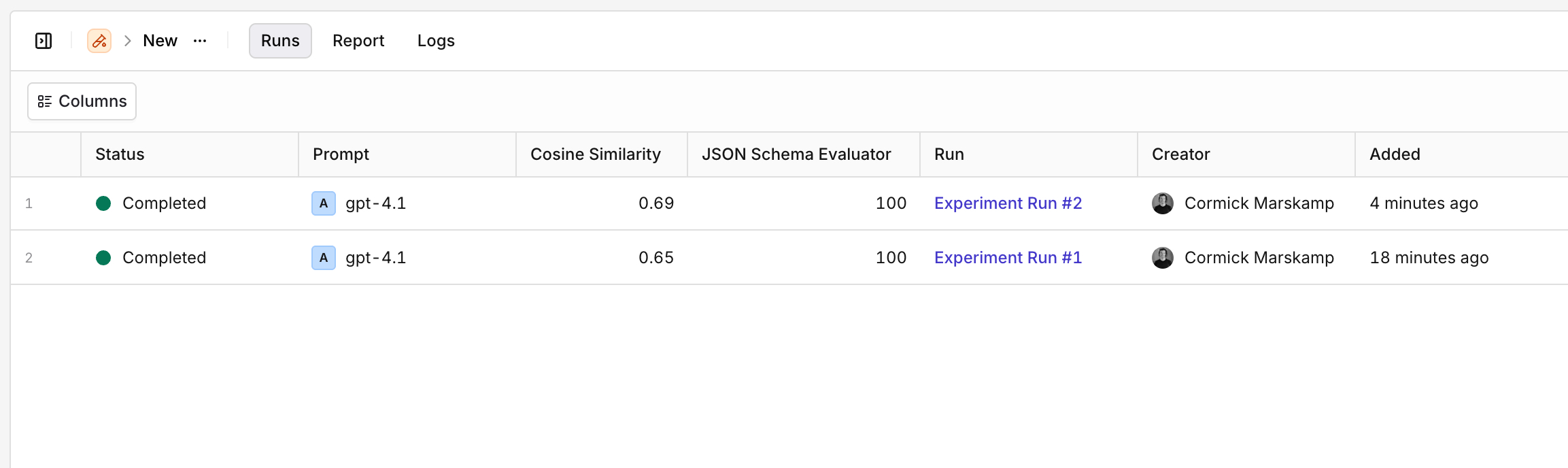

You can now compare different Experiment runs in a single overview using the new Runs tab.

Previously, reviewing the results of multiple experiment runs required clicking into each run individually and manually comparing scores. This made it harder to spot improvements or regressions at a glance.

With the new Runs overview, you get a clear, side-by-side comparison of all your experiment runs and their evaluator scores. This makes it much easier to see whether recent changes to your prompt or model setup have led to measurable improvements or to unexpected regressions.

Example:

In the screenshot, you can see that Experiment Run #1 and Experiment Run #2 both achieved a perfect score (100) on the JSON Schema Evaluator, while Cosine Similarity improved from 0.65 to 0.69.

No more clicking back and forth between runs: get instant insight into your progress in one place.
