Caching

Add caching to a Deployment to reduce the processing time and cost of repeat queries.

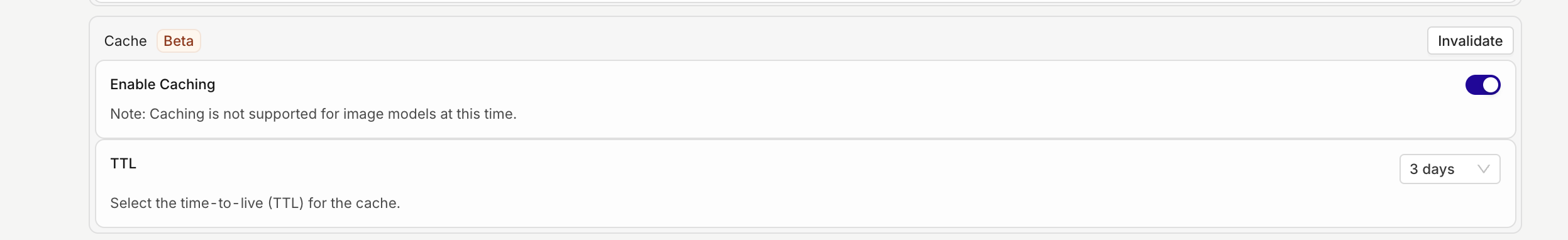

Enabling Cache on a Deployment

A Deployment's generations can be cached to reduce processing time and cost. When an incoming input has already been cached within the Deployment, the stored response is returned directly without triggering a new generation.

To enable caching, open a Deployment and go to Settings.

Select Enable Caching.

Caching applies to the whole Deployment and currently does not support image models.

The cache is used only when an incoming input exactly matches an input that has already been cached.
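The exact-match behavior can be illustrated with a minimal Python sketch. This is not the platform's implementation: the class `ExactMatchCache`, the `generate` callback, and the choice of hashing a canonical JSON form of the input are all assumptions made for illustration.

```python
import hashlib
import json


class ExactMatchCache:
    """Hypothetical exact-match response cache (illustration only)."""

    def __init__(self):
        self._store = {}  # cache key -> stored response

    @staticmethod
    def _key(payload: dict) -> str:
        # Serialize with sorted keys so the same input always yields the same key.
        canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get_or_generate(self, payload: dict, generate):
        """Return (response, was_cache_hit)."""
        key = self._key(payload)
        if key in self._store:
            # Identical input seen before: reuse the stored response.
            return self._store[key], True
        # Any difference in the input produces a new key and a new generation.
        response = generate(payload)
        self._store[key] = response
        return response, False
```

In this sketch, `{"prompt": "Hello"}` and `{"prompt": "hello"}` produce different keys, so the second input would trigger a new generation rather than a cache hit.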

Configuring TTL

TTL (time to live) is the amount of time a cached response is stored and served before it is invalidated. Once a response has been invalidated, the next matching input triggers a new LLM generation.
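As a rough illustration of how a TTL affects cached responses, the sketch below stores an expiry time next to each response. The class `TTLCache`, its method names, and the injectable `clock` parameter are hypothetical and are not part of the product.

```python
import time


class TTLCache:
    """Hypothetical cache whose entries expire after ttl_seconds (illustration only)."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store = {}     # key -> (expires_at, response)

    def put(self, key: str, response: str) -> None:
        # Record when this entry stops being valid.
        self._store[key] = (self._clock() + self._ttl, response)

    def get(self, key: str):
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, response = entry
        if self._clock() >= expires_at:
            # TTL elapsed: drop the entry so the caller generates a fresh response.
            del self._store[key]
            return None
        return response
```

A caller would treat a `None` result as a cache miss, run a new generation, and store the result with `put`.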

Once caching is enabled, you can set the TTL by choosing a value from the drop-down.

Invalidate Cache

You can invalidate the cache at any time by pressing the Invalidate button.
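Conceptually, manual invalidation amounts to discarding the stored responses so that the next identical input is generated again. The sketch below assumes that invalidation clears every entry for the Deployment; the class `DeploymentCache` and its methods are hypothetical names used only for illustration.

```python
class DeploymentCache:
    """Hypothetical per-Deployment cache with manual invalidation (illustration only)."""

    def __init__(self):
        self._store = {}  # input -> stored response

    def get_or_generate(self, prompt: str, generate):
        """Return (response, was_cache_hit)."""
        if prompt in self._store:
            return self._store[prompt], True
        response = generate(prompt)
        self._store[prompt] = response
        return response, False

    def invalidate(self) -> None:
        # Assumed behavior: drop every stored response at once.
        self._store.clear()
```

After `invalidate()` is called in this sketch, a prompt that was previously a cache hit becomes a miss and is generated again.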
