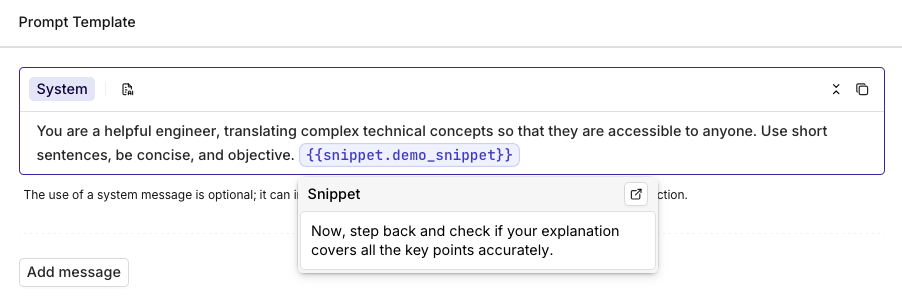

Prompt Snippet

Prompt Snippets are saved pieces of text that you can reuse within your prompts. They are useful for text that should appear in multiple prompts: editing a single snippet updates every prompt that uses it.
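To make the idea concrete, here is a minimal Python sketch of how one saved snippet can feed several prompts. It is an illustration only, not this product's implementation: the `{{snippet:NAME}}` placeholder syntax, the `render_prompt` function, and the example snippet and prompt names are all assumptions made up for this sketch.

```python
import re

# Hypothetical placeholder syntax used only in this sketch: {{snippet:NAME}}
SNIPPET_REF = re.compile(r"\{\{snippet:([A-Za-z0-9_-]+)\}\}")


def render_prompt(template: str, snippets: dict[str, str]) -> str:
    """Replace each snippet reference in the template with the saved snippet text."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in snippets:
            raise KeyError(f"Unknown snippet: {name}")
        return snippets[name]

    return SNIPPET_REF.sub(substitute, template)


# One saved snippet (hypothetical content), referenced by two prompts.
snippets = {"tone": "Answer politely and concisely."}
prompts = {
    "support": "{{snippet:tone}} Help the customer with their order.",
    "sales": "{{snippet:tone}} Describe the product's main benefits.",
}

rendered = {name: render_prompt(text, snippets) for name, text in prompts.items()}

# Editing the single snippet changes every prompt that references it.
snippets["tone"] = "Answer in a formal tone."
rendered_after_edit = {
    name: render_prompt(text, snippets) for name, text in prompts.items()
}
```

In this sketch the prompts store only a reference to the snippet, so the text is resolved at render time. That is why a single edit to `snippets["tone"]` shows up in both the `support` and `sales` prompts without touching either template.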

To get started, see:
