Custom LLM Evaluators

What custom LLM Evaluators are, how they are used in Experiments, and how to create your own.

What are LLM Evaluators

LLM Evaluators are language models that you instruct to evaluate the output of another language model. For example, LLM 1 can evaluate the tone of voice of the output generated by LLM 2.

LLM Evaluators use language models to run any kind of automated check you want to perform on an Experiment. These checks help you understand how your models perform and whether their behavior matches your hypothesis, without resorting to manual review.
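To make the LLM 1 / LLM 2 example concrete, here is a minimal Python sketch of the judge pattern outside orq.ai. The `LLM` type, the `evaluate_tone` function and the stand-in models are hypothetical, and the sketch assumes each model is simply a function from a prompt string to a reply string. In orq.ai you set this up through the Evaluator pages described below rather than in code.

```python
from typing import Callable

# Hypothetical type: a model is any function that takes a prompt and returns its text reply.
# A real implementation would wrap a call to a model provider; that is an assumption here.
LLM = Callable[[str], str]


def evaluate_tone(judge: LLM, generator: LLM, user_message: str) -> str:
    """Have one model (the judge, "LLM 1") rate the tone of another model's ("LLM 2") output."""
    # Step 1: LLM 2 produces the output under evaluation.
    output = generator(user_message)
    # Step 2: LLM 1 is instructed to evaluate that output.
    judge_prompt = (
        "Classify the tone of voice of the following response as one word: "
        "friendly, neutral, or rude.\n\n"
        f"Response:\n{output}"
    )
    # Normalize the judge's answer so " Friendly\n" and "friendly" compare equal.
    return judge(judge_prompt).strip().lower()


if __name__ == "__main__":
    # Stand-in models so the sketch runs without any API access.
    fake_generator = lambda msg: f"Happy to help with '{msg}'!"
    fake_judge = lambda prompt: "Friendly" if "Happy to help" in prompt else "Neutral"
    print(evaluate_tone(fake_judge, fake_generator, "reset my password"))  # prints: friendly
```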

In this document, we'll see how to create an LLM Evaluator and enable it for use within your Experiments.

How to Create an LLM Evaluator

To create a new LLM Evaluator, head to the Resources tab within the orq.ai panel and select Evaluators.

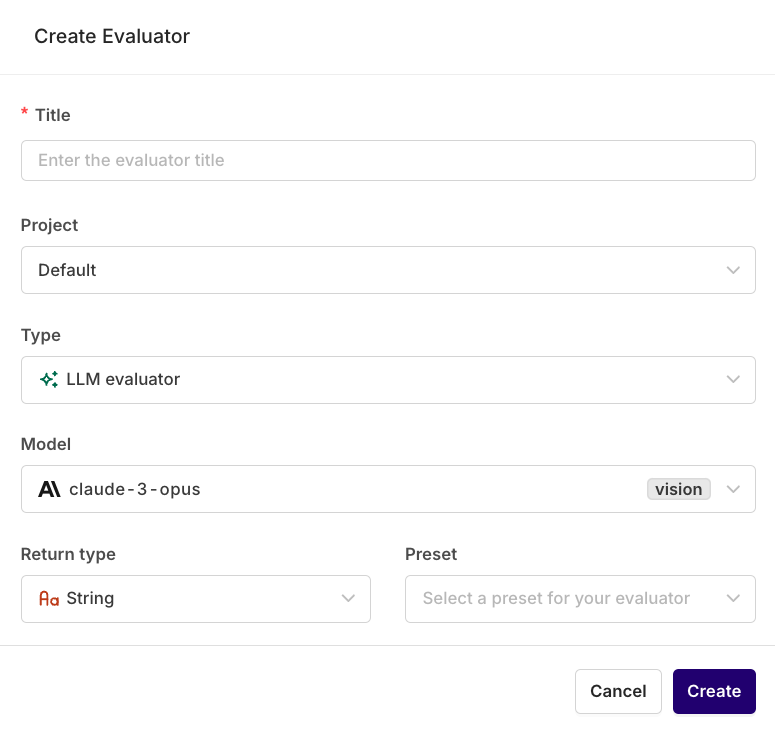

Next, select Create evaluator. This opens the LLM Evaluator creation modal, where you begin configuring your evaluator.

Here you can set your evaluator's title and the project it belongs to.

LLM Evaluator is currently the only available Evaluator Type.

You can also select a Model, Return Type, and Preset for your Evaluator. All of these parameters can be changed later.

Selecting Create takes you to the Evaluator configuration page, where you define the prompt and settings your Evaluator runs with.

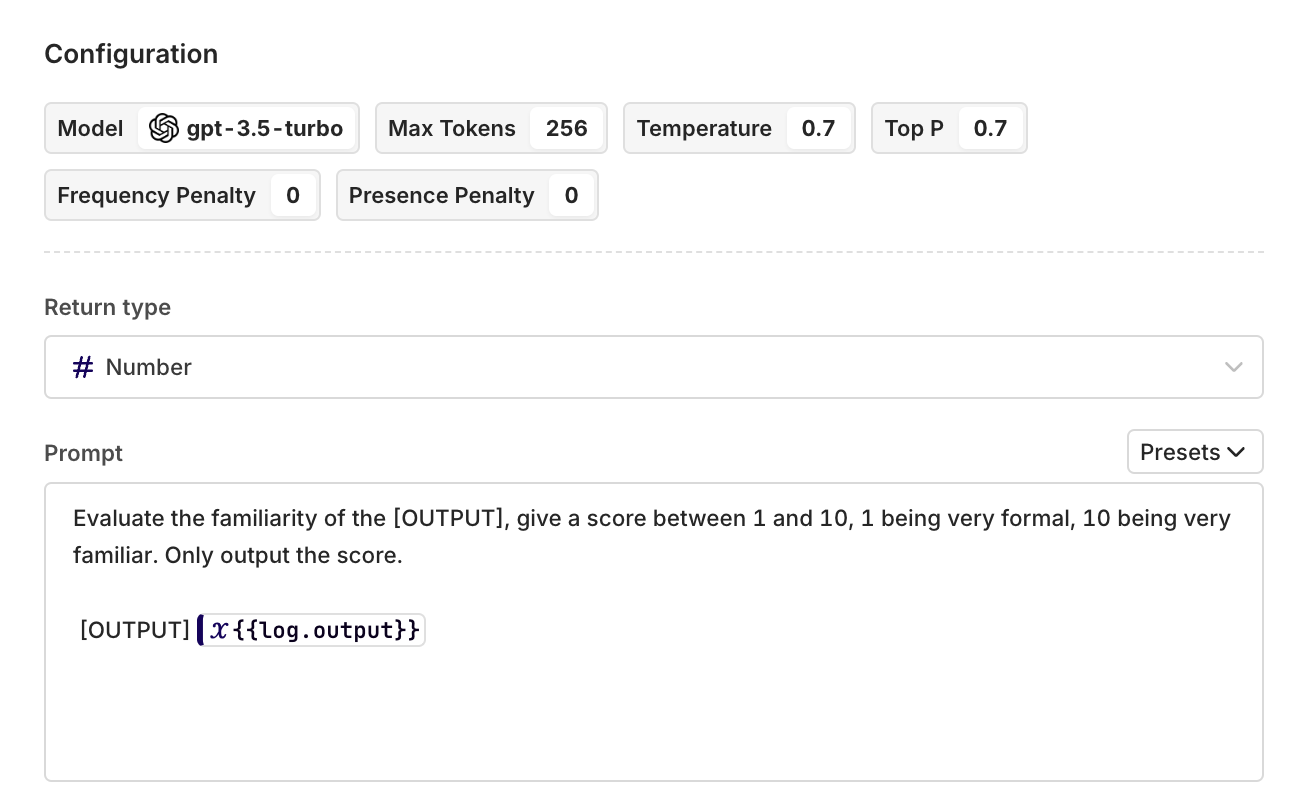

Model Configuration

In this section, you define which model runs the evaluation. You can choose any model that generates text; its answer needs to match the chosen Return Type.

The available Return Types are listed below. Note: the Return Type does not change how the LLM Evaluator behaves. It only tells Orq how to visualize the outcome.

| Return Type | Description |
|---|---|
| String | Your evaluator can return any string of text. |
| Boolean | Your evaluator returns true or false. |
| Number | Your evaluator returns a number. |
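Because the Return Type only tells Orq how to display the result, your prompt has to make the model answer in a matching format. The sketch below shows what a matching answer looks like for each type. `parse_result` is a hypothetical helper, not orq.ai's implementation, and it assumes Boolean answers are the literal words true or false.

```python
from typing import Union

# A parsed evaluator result: String -> str, Boolean -> bool, Number -> float.
Result = Union[str, bool, float]


def parse_result(raw: str, return_type: str) -> Result:
    """Interpret an evaluator model's raw text answer according to a Return Type.

    Hypothetical helper: it illustrates what it means for the model's answer
    to "match" String, Boolean, or Number. It is not orq.ai's implementation.
    """
    text = raw.strip()  # ignore surrounding whitespace and newlines
    if return_type == "String":
        return text  # any text is a valid String result
    if return_type == "Boolean":
        lowered = text.lower()
        if lowered in ("true", "false"):
            return lowered == "true"
        raise ValueError(f"expected 'true' or 'false', got {text!r}")
    if return_type == "Number":
        return float(text)  # raises ValueError if the answer is not numeric
    raise ValueError(f"unknown return type: {return_type!r}")
```

For example, `parse_result(" True\n", "Boolean")` returns `True`, while `parse_result("Looks great", "Number")` raises `ValueError`. This is why the prompt of a Number evaluator should ask the model to reply with a bare number.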

Once you have chosen a Return Type you can configure the prompt used within your LLM Evaluator.

An example prompt and configuration for an evaluator.

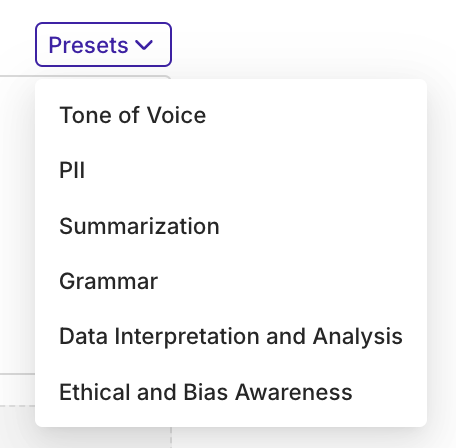

At any time, you can load one of our presets to see how a standard Evaluator is built from an LLM prompt. Select the Presets button and choose from the available presets.

When writing your evaluator prompt, you can use the following variables. At Experiment runtime, each variable is replaced with the corresponding value.

| Variable | Description |
|---|---|
| `{{log.input}}` | The messages template used to generate the output |
| `{{log.output}}` | The generated response from the model |
| `{{log.reference}}` | The reference used to compare the output |
| `{{log.latency}}` | The time taken to generate the response in milliseconds |
| `{{log.input_cost}}` | The cost of the input message in tokens |
| `{{log.output_cost}}` | The cost of the generated response in tokens |
| `{{log.total_cost}}` | The total cost of the input and output in tokens |
| `{{log.input_tokens}}` | The tokens used in the input message |
| `{{log.output_tokens}}` | The tokens used in the generated response |
| `{{log.total_tokens}}` | The total tokens used in the input and output |
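As an illustration of the runtime substitution described above, the following sketch replaces each `{{log.<field>}}` placeholder with a value from a log record. The `render_prompt` function and the dictionary shape of `log` are assumptions made for this example, and `log.input` is simplified to a plain string. orq.ai performs the substitution for you, and its internal implementation may differ.

```python
import re
from typing import Any, Mapping

# Matches placeholders such as {{log.output}} or {{log.total_tokens}}.
PLACEHOLDER = re.compile(r"\{\{log\.(\w+)\}\}")


def render_prompt(template: str, log: Mapping[str, Any]) -> str:
    """Replace each {{log.<field>}} placeholder with the matching value from `log`.

    Hypothetical helper. Unknown fields are left untouched so missing data is easy to spot.
    """
    def substitute(match: re.Match) -> str:
        field = match.group(1)
        return str(log[field]) if field in log else match.group(0)

    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    # A simple Boolean-style evaluator prompt using three of the variables.
    template = (
        "Question: {{log.input}}\n"
        "Answer: {{log.output}}\n"
        "Does the answer match the reference ({{log.reference}})? Reply true or false."
    )
    log = {"input": "What is 2 + 2?", "output": "4", "reference": "4"}
    print(render_prompt(template, log))
```

Leaving unknown placeholders in place, rather than substituting an empty string, is a design choice for the sketch: a prompt that still contains `{{log.reference}}` makes it obvious that the log had no reference.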

To save your Evaluator, press the Save changes button at the top right of the page.

From now on, you can use your LLM Evaluator just like any Standard Evaluator within Experiments. To learn more, see Evaluators in Experiments.
