Evaluators in Experiments

How to configure and use Evaluators within Experiments

Adding Evaluators to your Experiment lets you evaluate model-generated outputs quantitatively. Using standard scientific methods or custom LLM-based evaluations, you can automate model scoring to quickly check whether models meet a predefined hypothesis and how they compare with one another.

For each predefined model, evaluators execute after generation to test and score the output. Scores and results can be visualized within the Experiment panel.
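The flow described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the platform's implementation: the function `run_evaluators`, the model names, and the two toy evaluators are all hypothetical.

```python
from typing import Callable, Dict

# An evaluator here is any function that takes generated text and returns a score.
Evaluator = Callable[[str], float]


def run_evaluators(
    outputs: Dict[str, str],
    evaluators: Dict[str, Evaluator],
) -> Dict[str, Dict[str, float]]:
    """Score every model output with every evaluator, after generation."""
    return {
        model: {name: evaluate(text) for name, evaluate in evaluators.items()}
        for model, text in outputs.items()
    }


# Hypothetical outputs, as if two models had already generated their answers.
outputs = {
    "model-a": "Short answer.",
    "model-b": "A much longer answer without a final stop",
}

# Hypothetical evaluators: one binary check and one numeric measure.
evaluators = {
    "ends_with_period": lambda text: 1.0 if text.strip().endswith(".") else 0.0,
    "word_count": lambda text: float(len(text.split())),
}

scores = run_evaluators(outputs, evaluators)
for model, model_scores in scores.items():
    print(model, model_scores)
```

The result is one score per model and Evaluator pair, which is the shape of data a results table would display.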

Adding Evaluators to an Experiment

To add an Evaluator to an experiment, head to the Configuration tab.

In the Eval section, select Add Eval to add a new Evaluator to your Experiment.

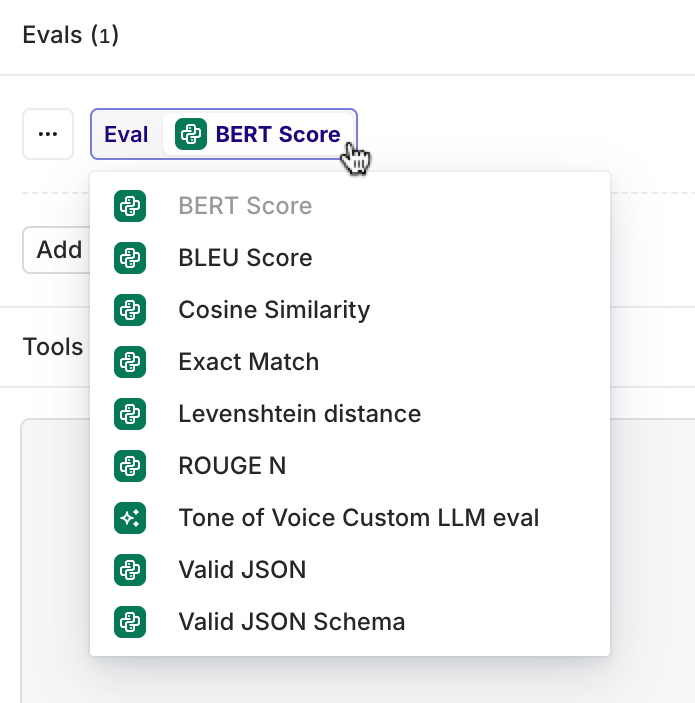

A new Evaluator appears on your panel. Click its name to choose from the available Evaluators. You can add as many Evaluators as you need to an Experiment.

Available Evaluators can be found within your Evaluators page. Here, you can view all Standard Evaluators and create your own Custom LLM Evaluators.

Configuring the Evaluator Reference

Some Evaluators need a reference to run. For example, to assess the similarity between two pieces of text, one piece must be used as a reference to which the other is compared.
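To make the role of the reference concrete, here is a minimal sketch of a reference-based similarity check. It uses `difflib.SequenceMatcher` from the Python standard library as a stand-in metric; the Standard Evaluators on the platform may compute similarity differently, and the function name `similarity_score` is hypothetical.

```python
from difflib import SequenceMatcher


def similarity_score(output: str, reference: str) -> float:
    """Return a similarity ratio between 0.0 and 1.0 for output vs. reference."""
    return SequenceMatcher(None, output, reference).ratio()


reference = "Paris is the capital of France."
candidate = "The capital of France is Paris."

# The candidate is compared to the reference, never the other way around.
print(round(similarity_score(candidate, reference), 2))

# Text that is identical to the reference gets the maximum score.
print(similarity_score(reference, reference))
```

Without a reference there is nothing to compare the output against, which is why such Evaluators cannot run until one is configured.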

Within Experiments, you have two ways of defining the reference used by your Evaluators.

Reference from Dataset

When configuring your Datasets, you can enter a reference on the right side of the screen. This dataset reference will then be used by the Evaluators.

Reference from a Model Output

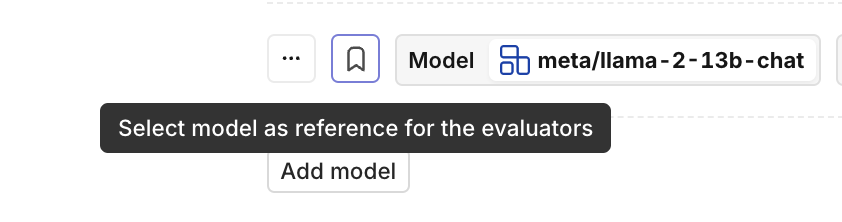

You can define a model as a reference model for your Evaluators to run against. Within the Experiment, the model's generated output will be used as a reference for your Evaluators.

To select which model to use as a reference, select the reference button next to the model name.

Only one model can be selected as a reference for an experiment.
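Conceptually, choosing a reference model means that model's output becomes the reference text for every other model. The sketch below illustrates this under stated assumptions: `score_against_reference` and the model names are hypothetical, and `difflib.SequenceMatcher` stands in for whichever Evaluator you have configured.

```python
from difflib import SequenceMatcher
from typing import Dict


def score_against_reference(
    outputs: Dict[str, str],
    reference_model: str,
) -> Dict[str, float]:
    """Score each non-reference model against the single reference model's output."""
    if reference_model not in outputs:
        raise KeyError(f"Unknown reference model: {reference_model}")
    reference = outputs[reference_model]
    return {
        model: SequenceMatcher(None, text, reference).ratio()
        for model, text in outputs.items()
        if model != reference_model  # the reference is not scored against itself
    }


# Hypothetical outputs from three models for the same dataset entry.
outputs = {
    "model-a": "The meeting is on Tuesday at 10 am.",
    "model-b": "The meeting is on Tuesday at 10 am.",
    "model-c": "No meeting is scheduled.",
}

scores = score_against_reference(outputs, reference_model="model-a")
print(scores)
```

Because exactly one model is passed as `reference_model`, every score in the result is measured against the same baseline, which keeps the scores comparable across models.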

Viewing Evaluator Results

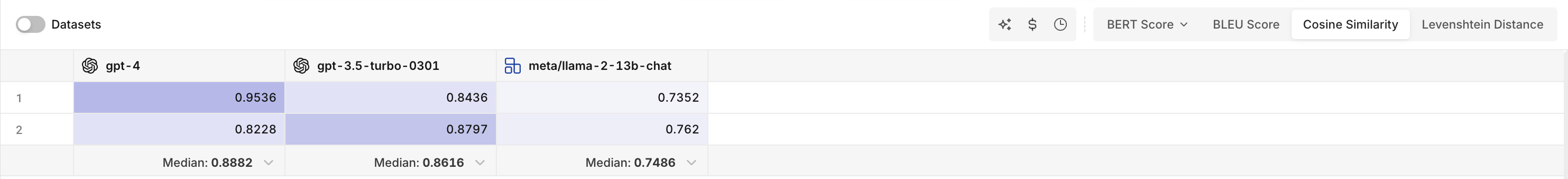

Once an Experiment has been run, you can view the Evaluator results on the Report page.

Here, next to the results, cost, and latency for each model, you can toggle and view scores for each of the Evaluators configured for the Experiment.

When you select an Evaluator, the table displays its scores alongside each model and dataset entry that was run.

Cells are colored according to their score, so you can spot outliers at a glance.
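As a rough illustration of score-based coloring, the sketch below maps a normalized score to a color bucket. The thresholds, the color names, and the function `score_to_color` are assumptions made for this example; the actual color scale used on the Report page is not specified here.

```python
def score_to_color(score: float, low: float = 0.4, high: float = 0.8) -> str:
    """Map a score in [0.0, 1.0] to a color bucket (thresholds are hypothetical)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"Score must be between 0.0 and 1.0, got {score}")
    if score < low:
        return "red"
    if score < high:
        return "yellow"
    return "green"


# Hypothetical scores for one Evaluator across three models.
row = {"model-a": 0.92, "model-b": 0.55, "model-c": 0.12}
for model, score in row.items():
    print(model, score, score_to_color(score))
```

With buckets like these, a single low-scoring cell stands out from a row of otherwise high scores.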
