Setting up a Playground

A walkthrough of all functionalities within Playgrounds.

The Playground lets you quickly test hypotheses and prompts on multiple models at the same time. To learn more about when to use a Playground, see Use cases for Playgrounds.

In this walkthrough, we will review all functionalities of the Playground, from initial creation to advanced comparison of multiple models.

Creating a Playground

To get started with creating your Playground:

- Log in to your Admin Panel.

- Go to the Playground tab.

- Select Create playground.

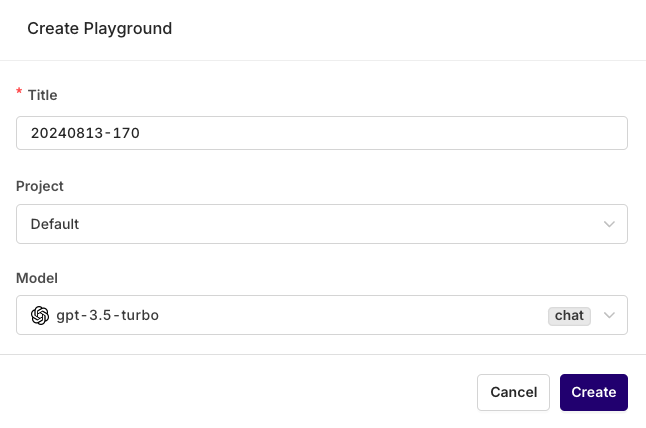

The following modal opens. Here you can give your Playground a title, choose which Project it belongs to, and select the model you want to use first. You can change all of these settings later.

Parameters

The first step to preparing your Playground is configuring the parameters for the model you want to run.

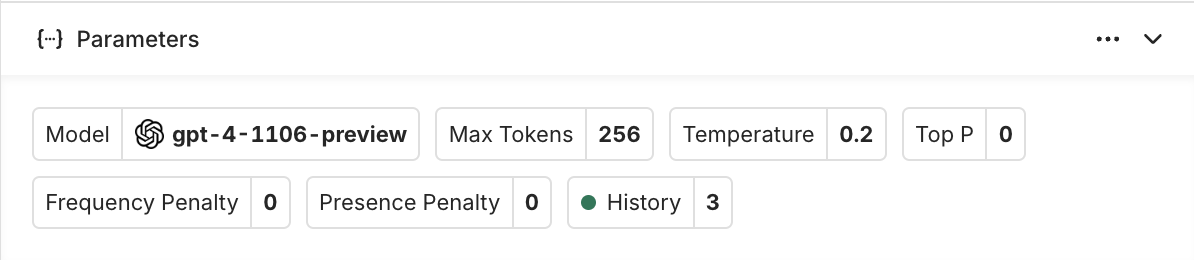

To configure your parameters, toggle the Parameters block at the top-left of the page.

To change the model used, click on the model name to open a list of available models.

In the Playground, all available models run through our API keys. You don't need to set up your own keys to quickly try a model you might not otherwise have access to, which makes it easy to test several models in a row.

See which models are available in our Model Garden.

Model parameters

Setting the right parameters is important, especially Max Tokens: make sure it allows the model enough tokens for its full output, otherwise the response can be cut off.

When you select a model, the block automatically updates to show all the parameters available for that model.

To learn more about the most common model parameters, see Model Parameters.

History

History is a parameter specific to the Playground. It defines how many previous message pairs the model keeps in memory during a conversation.

Because History controls how much of the conversation the model can see, it directly affects the model's behavior. Set it high enough to include every earlier exchange the model needs to answer properly.

When History is set to 0, the model ignores all previous message pairs and acts as a completion model: it won't remember anything said earlier in the conversation.
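To make the effect of History concrete, here is a minimal Python sketch of how a History value could trim a conversation before it is sent to the model. It assumes that the prompt template messages are always sent, that the conversation alternates user and assistant messages and ends with the new user message, and that History counts complete user/assistant pairs. `apply_history` is a hypothetical helper, not the Playground's implementation.

```python
from typing import Dict, List

Message = Dict[str, str]  # e.g. {"role": "user", "content": "..."}


def apply_history(template: List[Message], conversation: List[Message], history: int) -> List[Message]:
    """Return the messages sent to the model for the next generation.

    Illustrative assumptions: template messages are always sent, and `history`
    keeps only the last N user/assistant pairs before the newest user message.
    """
    if history < 0:
        raise ValueError("history must be 0 or greater")
    if not conversation:
        raise ValueError("conversation must contain the new user message")
    *past, latest = conversation
    # Each pair is two messages (user + assistant); history=0 keeps none.
    kept = past[-2 * history:] if history > 0 else []
    return template + kept + [latest]


template = [{"role": "system", "content": "You are an expert botanist."}]
conversation = [
    {"role": "user", "content": "Which plants thrive in shady environments?"},
    {"role": "assistant", "content": "Ferns, Hostas, and Hydrangeas."},
    {"role": "user", "content": "Which of those flower?"},
]

for n in (0, 1):
    sent = apply_history(template, conversation, history=n)
    print(n, [m["role"] for m in sent])
# 0 ['system', 'user']
# 1 ['system', 'user', 'assistant', 'user']
```

With History at 0, only the template and the newest question reach the model, so a follow-up such as "Which of those flower?" loses the context it refers to.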

Prompt Template

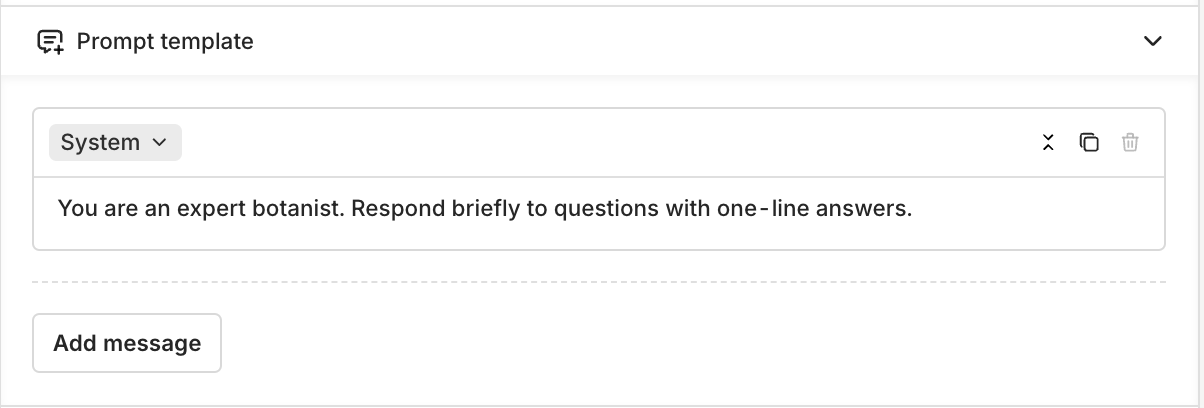

The next step is to define the prompt template. The template is designed so you can iterate on your prompt quickly.

After filling in your prompt, select Generate and the model returns an output.

If you clear the output (assistant messages) and any follow-up messages, the prompt template is kept.

Messages

To start preparing messages for your model, select Add Message in the Prompt Template panel.

Here you can enter a message that the model receives before generating responses. Use it to set the context for any hypothesis or prompt you want to test.

If you want to chat with the model, we recommend doing so outside the prompt template.

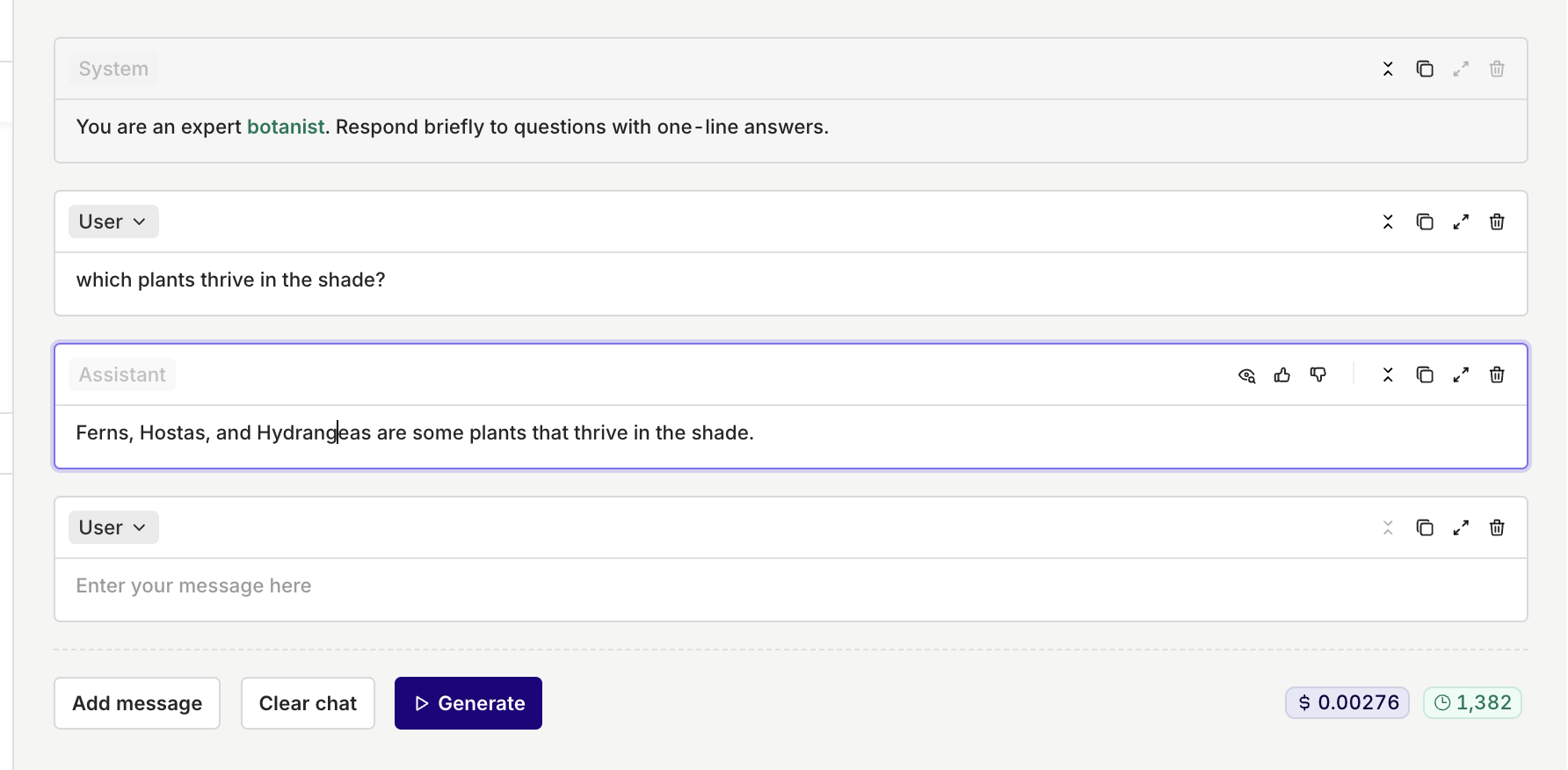

The prompt template that will be used as context for the language model to process queries.

Roles

When adding a message, you can choose its role. Each role serves a different purpose when interacting with a language model:

| Role | Description | Example |
|---|---|---|
| System | A guideline or context for the language model, directing how it should interpret and respond to requests. | "You are an expert botanist. Respond briefly to questions with one-line answers." |
| User | An actual query posed by the user. | "Which plants thrive in shady environments?" |
| Assistant | Responses to user queries by the language model. | "Ferns, Hostas, and Hydrangeas are some plants that thrive in shady environments." |
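The three roles correspond to the list-of-messages format used by most chat APIs. The Python sketch below builds the example conversation from the roles table in that shape. The `role`/`content` keys and the `make_message` helper are assumptions chosen for illustration, not a specific API.

```python
from typing import Dict, List

# The three roles from the table, in lowercase as most chat APIs spell them (assumption).
ALLOWED_ROLES = {"system", "user", "assistant"}


def make_message(role: str, content: str) -> Dict[str, str]:
    """Create one chat message, rejecting any role outside the three listed."""
    if role not in ALLOWED_ROLES:
        raise ValueError(f"unknown role: {role!r}")
    return {"role": role, "content": content}


messages: List[Dict[str, str]] = [
    # System: sets the context and style for every answer.
    make_message("system", "You are an expert botanist. Respond briefly to questions with one-line answers."),
    # User: the actual question.
    make_message("user", "Which plants thrive in shady environments?"),
    # Assistant: a model reply (here, a canned example answer).
    make_message("assistant", "Ferns, Hostas, and Hydrangeas are some plants that thrive in shady environments."),
]

for message in messages:
    print(f"{message['role']}: {message['content']}")
```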

Input Variables

You can use inputs within your Prompt Template to make it dynamic.

To add an input, type {{input_key}} within a message, replacing input_key with the name of your input.

To see all declared inputs, select the Inputs block at the top-right of the page. There you can enter the default value used for each input during generation.

Where to insert an input in a message, and how its value replaces the placeholder during generation.
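The substitution works like a find-and-replace over each message. The Python sketch below is a hypothetical illustration of that behavior, not the Playground's code. `render` replaces every {{input_key}} placeholder with the value entered for that input. Tolerating spaces inside the braces and raising an error for inputs that have no value are assumptions, and the Playground may handle both cases differently.

```python
import re
from typing import Dict

# Matches {{input_key}}; tolerating spaces inside the braces is an assumption.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(message: str, inputs: Dict[str, str]) -> str:
    """Replace every {{key}} in a message with the value entered for that input."""

    def substitute(match: "re.Match[str]") -> str:
        key = match.group(1)
        if key not in inputs:
            raise KeyError(f"no value provided for input {key!r}")
        return inputs[key]

    return PLACEHOLDER.sub(substitute, message)


message = "Which plants thrive in {{environment}} environments?"
print(render(message, {"environment": "shady"}))
# Which plants thrive in shady environments?
print(render(message, {"environment": "dry"}))
# Which plants thrive in dry environments?
```

Because the template keeps the placeholder, changing the value in the Inputs block is enough to test the same prompt with different data.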

Knowledge Bases

Knowledge Bases are designed to store data from various file sources and retrieve it efficiently. When using a compatible model, you can configure a Knowledge Base to be queried as part of your prompt configuration.

To learn more about Knowledge Bases, see Setting up a Knowledge Base and Using a Knowledge Base.

Tools

Tools let you use Function Calling in your LLM calls.

Function Calling lets you reliably generate structured output with a language model. This is especially helpful when integrating your language model with other systems.

For example, function calling can generate a valid, schema-validated JSON payload for integrating with any API.

To learn more about Function Calling, see Function calling in Playground.
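To illustrate the structured-output idea, the Python sketch below defines a tool in the JSON Schema-based "function" format used by OpenAI-style chat APIs, then checks a model's JSON arguments against it. The tool name `get_plants`, its parameters, and the `validate_arguments` helper are hypothetical, and the exact format your model expects may differ. The check covers only a small subset of JSON Schema: required keys, unknown keys, and top-level types.

```python
import json
from typing import Any, Dict

# Hypothetical tool definition in the OpenAI-style "function" format (assumption).
GET_PLANTS_TOOL: Dict[str, Any] = {
    "type": "function",
    "function": {
        "name": "get_plants",
        "description": "List plants that thrive in a given environment.",
        "parameters": {
            "type": "object",
            "properties": {
                "environment": {"type": "string"},
                "limit": {"type": "integer"},
            },
            "required": ["environment"],
            "additionalProperties": False,
        },
    },
}

# Subset of JSON Schema type names mapped to Python types.
JSON_TYPES: Dict[str, Any] = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
}


def validate_arguments(raw: str, tool: Dict[str, Any]) -> Dict[str, Any]:
    """Parse the model's JSON arguments and check them against the tool's schema."""
    args = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on invalid JSON
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    schema = tool["function"]["parameters"]
    missing = [key for key in schema["required"] if key not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    for key, value in args.items():
        prop = schema["properties"].get(key)
        if prop is None:  # additionalProperties is False, so unknown keys are rejected
            raise ValueError(f"unexpected argument: {key!r}")
        expected = prop["type"]
        # bool is a subclass of int in Python, so reject it explicitly for numeric types.
        if isinstance(value, bool) and expected in ("integer", "number"):
            raise ValueError(f"argument {key!r} must be {expected}")
        if not isinstance(value, JSON_TYPES[expected]):
            raise ValueError(f"argument {key!r} must be {expected}")
    return args


print(validate_arguments('{"environment": "shady", "limit": 3}', GET_PLANTS_TOOL))
# {'environment': 'shady', 'limit': 3}
```

In a real integration you would use a full JSON Schema validator. The point here is that a schema makes it possible to reject malformed output before it reaches another system.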

Generation

Once the Prompt Template is ready, you can generate an output and simulate a chat.

On the right side of the panel, your template appears, ready for you to chat with.

To add messages after the template messages and prepare the conversation for generation, use the Add message button.

When the conversation is ready to be sent, select Generate.

Responses are generated in real time, and you can continue the conversation to generate new answers.

At the bottom right of the panel, you can see the cost and latency of the latest generated output.
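Here is a rough idea of where a cost figure comes from. Many providers bill per token, with separate prices for input (prompt) tokens and output tokens. Under that pricing scheme, the cost of one generation is input tokens × input price + output tokens × output price. The Python sketch below assumes per-1,000-token pricing and uses made-up prices that are not real rates for any model. `generation_cost` is a hypothetical helper, and the Playground's own calculation may differ.

```python
from decimal import Decimal


def generation_cost(input_tokens: int, output_tokens: int,
                    input_price_per_1k: Decimal, output_price_per_1k: Decimal) -> Decimal:
    """Cost of one call, assuming separate per-1,000-token prices for input and output."""
    # Decimal avoids binary floating-point rounding in currency arithmetic.
    return (input_tokens * input_price_per_1k + output_tokens * output_price_per_1k) / 1000


# Made-up prices for illustration only.
cost = generation_cost(1200, 300, Decimal("0.0005"), Decimal("0.0015"))
print(f"${cost}")
# $0.00105
```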

Comparing multiple models

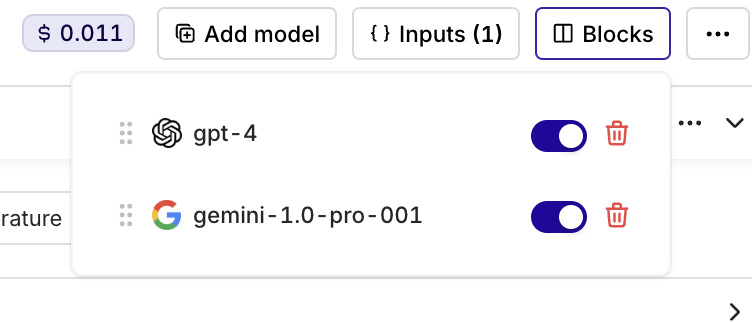

A key strength of Playgrounds is the ability to compare different models side by side.

To add another model to your Playground, press the Add Model button at the top-right of the panel.

This splits the panel, adding a second column that contains the new model's block.

Copying and Duplicating Models

By default, a newly added model starts with an empty configuration. To copy the Prompt Template from one model to another, open the ... menu at the top-right of the block and choose Copy Prompt Template to copy it to one or all of the other models.

Likewise, you can duplicate a model by opening the ... menu and choosing Duplicate Block.

Blocks

Each model in your Playground lives in its own block.

At any time, you can deactivate a block by opening the Blocks menu at the top-right of the panel and toggling which models are displayed.

Use the Blocks menu to view all the models in your Playground. Toggle the models you want to see and select the trash button to delete a block.

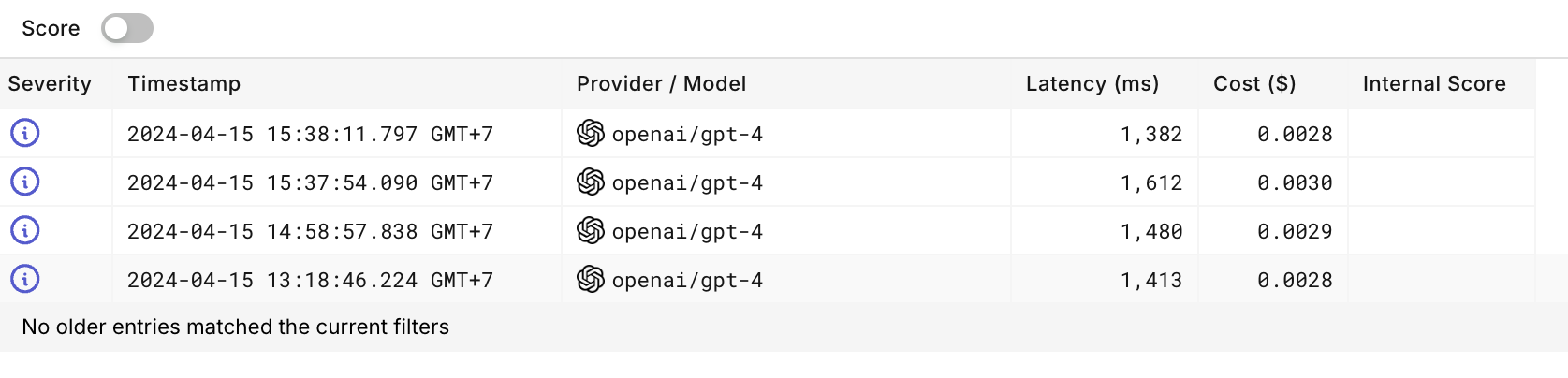

Logs

By selecting the Logs menu at the top-left of the Playground panel, you can view all LLM calls (generations) made during the lifetime of your Playground.

On the Logs page, you can see details for all LLM calls made when using your Playground.

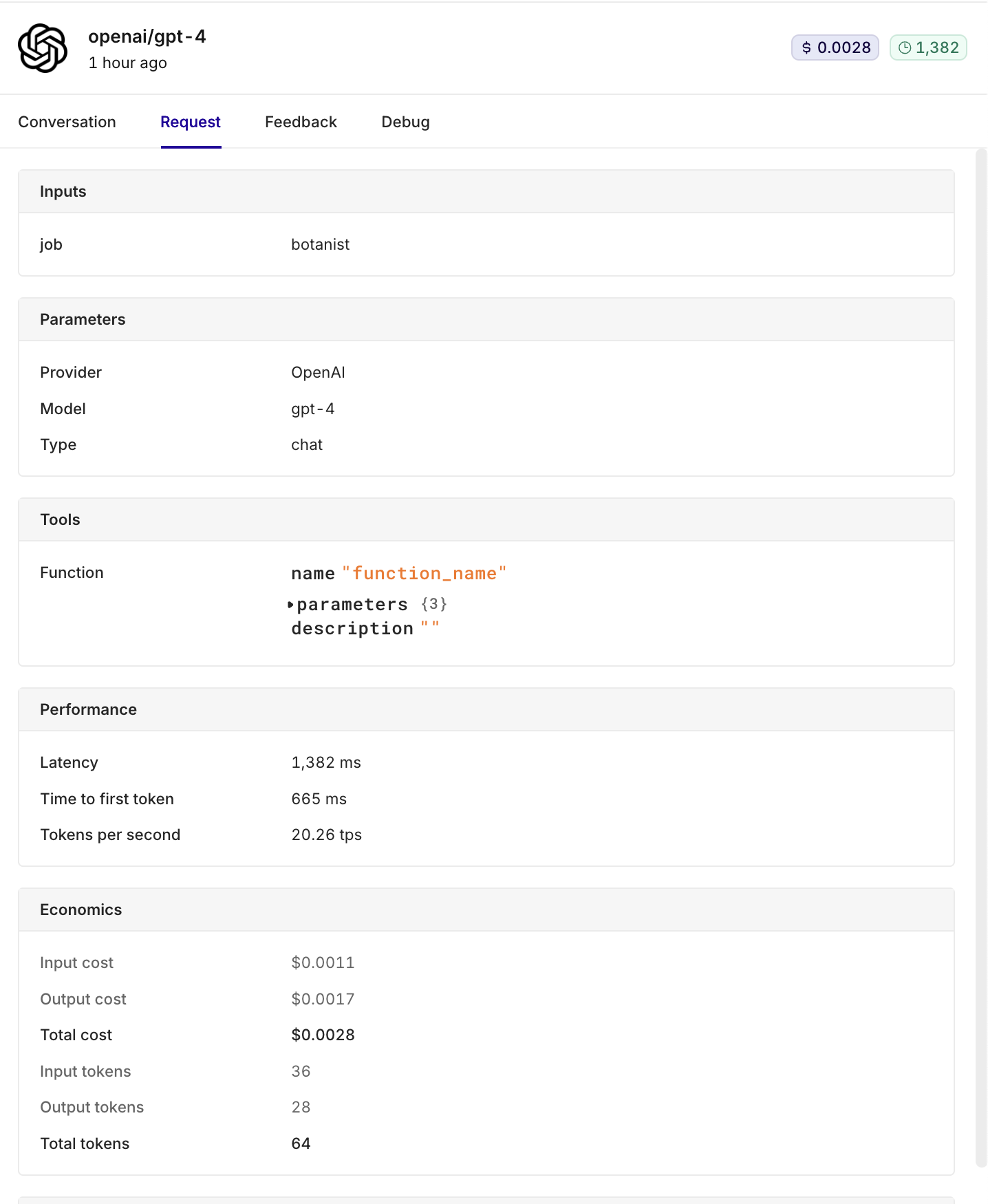

Select a single log to see more details about that call, including the Conversation history, Request details, Feedback, and Debug information.

Details for a single LLM call

Hyperlinking from Logs

When viewing a single log, you can copy its setup to Datasets, Experiments, or Deployments. The whole setup is copied to the chosen module, so you can quickly switch between modules while keeping the same configuration.

Open the ... menu at the top-right of the chosen log and select one of the following:

- Create Deployment to create a new Deployment from this configuration.

- Add variant to Deployment to add a new variant onto an existing Deployment.

- Add to Dataset to add the chosen configuration to an existing Dataset.

- Run in Experiment to add the chosen configuration to a new Experiment.
