Feedback in Orq

Human in the Loop lets you record feedback and corrections on your models' responses within logs.

What is Human in the Loop

Human in the Loop is a way to collect feedback from your end users and to have your domain experts annotate each log with feedback and corrections for future improvement.

Logging Feedback

Logging feedback is especially useful for domain experts to validate an output, flag defects, and mark interactions with responses generated by models.

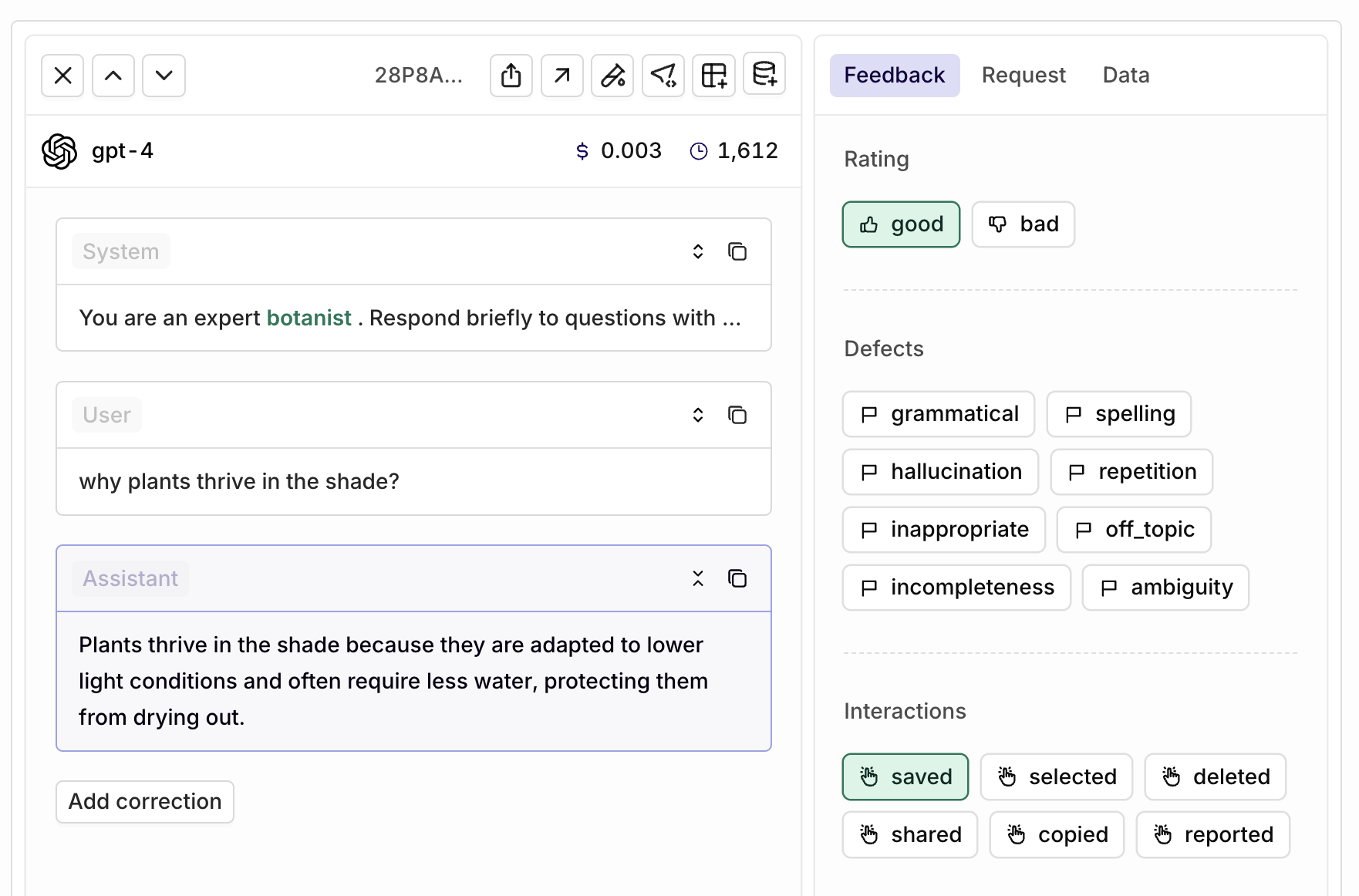

In any module, open the Logs tab and select a single Log. The Feedback panel appears on the right of the Log detail panel, letting you qualify the selected generation.

There are three types of feedback available in the Feedback panel: Ratings, Defects, and Interactions.

To learn more about each type, see Feedback types.

Making Corrections

Aside from logging feedback, you are also able to correct a generation to the desired response. This is useful when adding the response to a Curated Dataset.

To add a correction, first find the response you want to correct by choosing the Logs tab, then selecting a single Log.

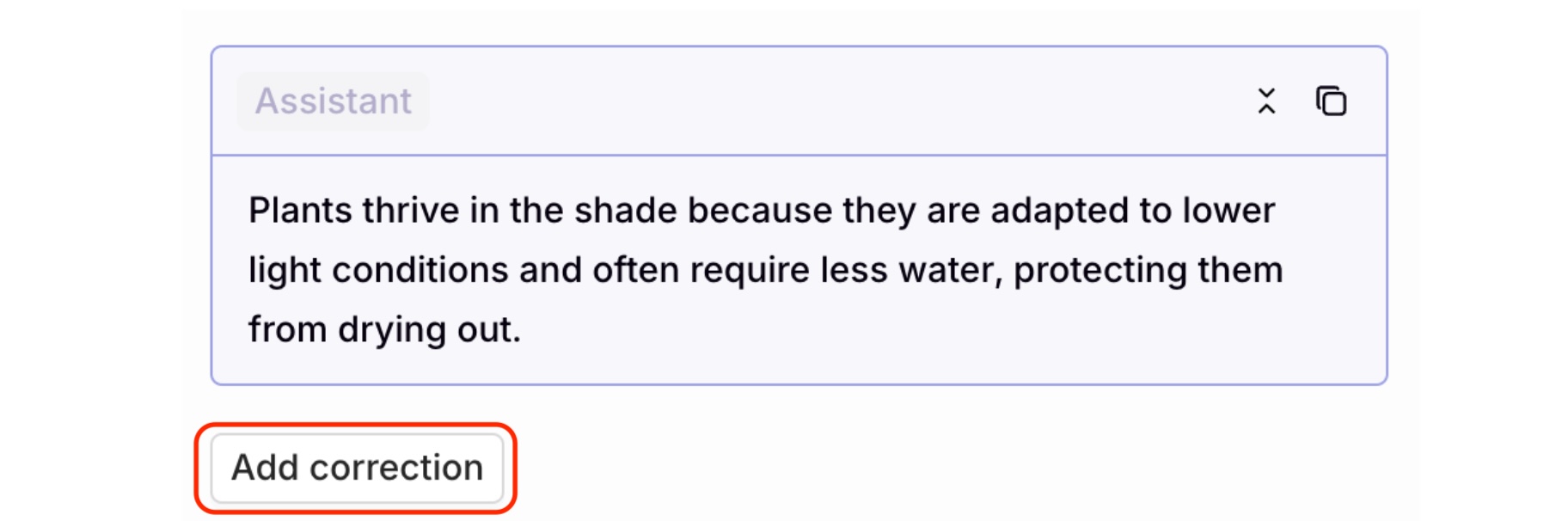

Below the Assistant response, find the Add correction button:

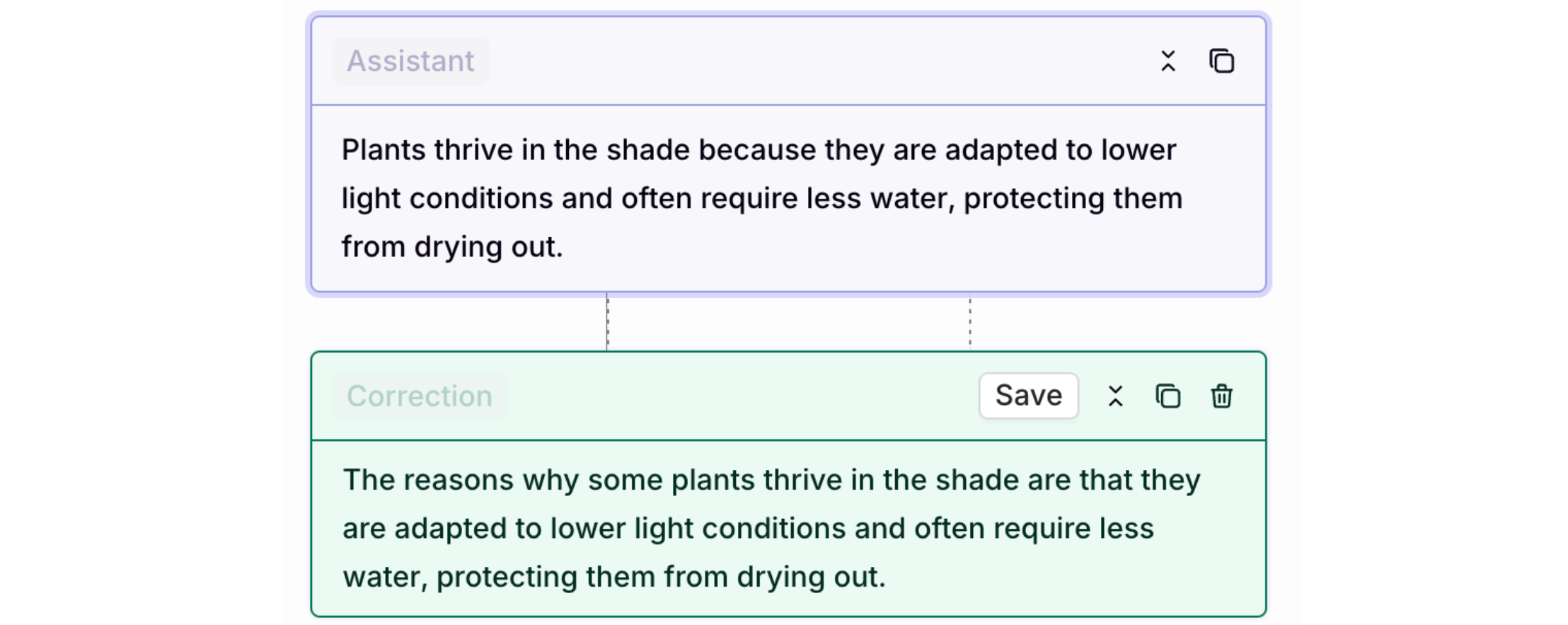

Choosing to add a correction opens a new Correction message in which you can manually edit the response provided by the model. Once you have finished editing, select Save to use that correction.

The original response and its correction appear next to one another; the correction is displayed in green.

Corrections are a great way to fine-tune your models. To learn more, see Curated datasets.
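To illustrate why corrections are useful for fine-tuning, here is a minimal sketch of how corrected logs could be turned into training rows. It does not use the Orq API: the `LogEntry` class, its fields, and the `to_dataset_rows` helper are hypothetical names chosen for this example, and the prompt/completion row format is an assumption rather than the Curated Dataset schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LogEntry:
    """Hypothetical stand-in for a single log; not Orq's actual schema."""
    prompt: str
    response: str                      # text generated by the model
    correction: Optional[str] = None   # text saved by a reviewer, if any


def to_dataset_rows(logs: list[LogEntry]) -> list[dict[str, str]]:
    """Build prompt/completion rows, keeping only logs that were corrected."""
    rows = []
    for log in logs:
        if log.correction is None:
            continue  # uncorrected logs have no reviewer-approved target
        rows.append({"prompt": log.prompt, "completion": log.correction})
    return rows


# Example data (invented for illustration only).
logs = [
    LogEntry("What is 2 + 2?", "5", correction="4"),
    LogEntry("Capital of France?", "Paris"),
]
rows = to_dataset_rows(logs)
```

In this sketch only the first log produces a row, because it is the only one with a saved correction. The reviewer's text, not the model's original output, becomes the target completion.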
