LiteLLM

Integrate Orq.ai with LiteLLM using OpenTelemetry

Getting Started

LiteLLM provides a unified interface for multiple LLM providers, enabling seamless switching between OpenAI, Anthropic, Cohere, and 100+ other providers. Tracing LiteLLM with Orq.ai gives you comprehensive insights into provider performance, cost optimization, routing decisions, and API reliability across your multi-provider setup.

Prerequisites

Before you begin, ensure you have:

- An Orq.ai account and API Key

- LiteLLM installed in your project

- Python 3.8+

- API keys for your LLM providers (OpenAI, Anthropic, Cohere, etc.)

Install Dependencies

# Core LiteLLM and OpenTelemetry packages
pip install litellm opentelemetry-sdk opentelemetry-exporter-otlp
pip install 'litellm[proxy]'
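After installing, it can be useful to confirm that the packages are visible to the interpreter you will actually run. The sketch below is one way to do that using only the standard library. The names `REQUIRED`, `python_ok`, and `installed_versions` are hypothetical helpers introduced here, and the distribution names are simply the ones from the pip commands above.

```python
import sys
from importlib import metadata

# Distribution names taken from the pip commands in this section
REQUIRED = ("litellm", "opentelemetry-sdk", "opentelemetry-exporter-otlp")


def python_ok(minimum=(3, 8)):
    """Return True when the running interpreter meets the minimum version."""
    return sys.version_info >= minimum


def installed_versions(packages=REQUIRED):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


print(f"Python 3.8+: {python_ok()}")
for name, version in installed_versions().items():
    print(f"{name}: {version or 'NOT INSTALLED'}")
```

Any entry reported as missing usually means the install ran against a different environment than the one executing the script.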

Configure Orq.ai

Set up your environment variables to connect to Orq.ai's OpenTelemetry collector:

Unix/Linux/macOS:

export OTEL_EXPORTER_OTLP_ENDPOINT="https://api.orq.ai/v2/otel/v1/traces"
export OTEL_EXPORTER_OTLP_HEADERS="Authorization=Bearer <ORQ_API_KEY>"
export OTEL_RESOURCE_ATTRIBUTES="service.name=litellm-app,service.version=1.0.0"
export OPENAI_API_KEY="<YOUR_OPENAI_API_KEY>"

Windows (PowerShell):

$env:OTEL_EXPORTER_OTLP_ENDPOINT = "https://api.orq.ai/v2/otel/v1/traces"
$env:OTEL_EXPORTER_OTLP_HEADERS = "Authorization=Bearer <ORQ_API_KEY>"
$env:OTEL_RESOURCE_ATTRIBUTES = "service.name=litellm-app,service.version=1.0.0"
$env:OPENAI_API_KEY = "<YOUR_OPENAI_API_KEY>"

Using .env file:

OTEL_EXPORTER_OTLP_ENDPOINT=https://api.orq.ai/v2/otel/v1/traces
OTEL_EXPORTER_OTLP_HEADERS=Authorization=Bearer <ORQ_API_KEY>
OTEL_RESOURCE_ATTRIBUTES=service.name=litellm-app,service.version=1.0.0
OPENAI_API_KEY=<YOUR_OPENAI_API_KEY>

Integrations
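Before enabling an integration, it may help to confirm that the exporter settings from the previous section are visible to the Python process and that no `<PLACEHOLDER>` value was left unreplaced. The following is a minimal sketch under those assumptions. `check_otel_env` and `REQUIRED_VARS` are hypothetical names introduced here, not part of LiteLLM or Orq.ai.

```python
import os

# Variable names taken from the configuration section above
REQUIRED_VARS = ("OTEL_EXPORTER_OTLP_ENDPOINT", "OTEL_EXPORTER_OTLP_HEADERS")


def check_otel_env(env=None):
    """Return a list of problems; an empty list means the settings look usable."""
    env = os.environ if env is None else env
    problems = []
    for name in REQUIRED_VARS:
        value = env.get(name, "").strip()
        if not value:
            problems.append(f"{name} is not set")
        elif "<" in value and ">" in value:
            # Catches values copied verbatim, e.g. "Bearer <ORQ_API_KEY>"
            problems.append(f"{name} still contains a <PLACEHOLDER>")
    return problems


for problem in check_otel_env():
    print(f"warning: {problem}")
```

Passing a plain dictionary instead of relying on `os.environ` makes the check easy to exercise in tests without touching the real environment.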

Choose your preferred OpenTelemetry framework for collecting traces:

LiteLLM

Auto-instrumentation with minimal setup:

import litellm

# One line enables OpenTelemetry logging of LLM responses across all providers
litellm.callbacks = ["otel"]

# LiteLLM calls made after this point are traced automatically
response = litellm.completion(
    model="gpt-4o",
    messages=[{"role": "user", "content": "Hello, how are you?"}]
)

print(response.choices[0].message.content)

Examples

Basic Multi-Provider Usage

import litellm

# One line enables OpenTelemetry logging of LLM responses across all providers
litellm.callbacks = ["otel"]

def basic_multi_provider_example():
    # Define different models from various providers
    models = [
        "gpt-4",                   # OpenAI
        "claude-3-opus-20240229",  # Anthropic
        "command-nightly",         # Cohere
        "gemini-pro",              # Google
        "llama-2-70b-chat",        # Meta (via Replicate)
    ]

    prompt = "Explain the benefits of microservices architecture in 2 sentences."
    results = []

    for model in models:
        try:
            print(f"Testing {model}...")
            response = litellm.completion(
                model=model,
                messages=[{"role": "user", "content": prompt}],
                max_tokens=150,
                temperature=0.7
            )
            tokens = response.usage.total_tokens
            results.append({
                "model": model,
                "content": response.choices[0].message.content,
                "tokens": tokens,
                # Rough estimate at a flat $0.002 per 1K tokens; real prices vary by model
                "cost": (tokens / 1000) * 0.002
            })
        except Exception as e:
            # Record the failure and continue so one provider error does not stop the run
            print(f"Error with {model}: {e}")
            results.append({
                "model": model,
                "error": str(e)
            })

    return results

results = basic_multi_provider_example()

for result in results:
    if "error" not in result:
        print(f"{result['model']}: {result['tokens']} tokens, ~${result['cost']:.4f}")

Cost Optimization with Provider Fallback
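The example in this section estimates cost from per-1K-token prices. As a small sketch of that arithmetic on its own, the block below divides the token count by 1,000 before applying the price. `estimate_cost` is a hypothetical helper introduced here, and the price passed to it is a placeholder rather than a real provider rate.

```python
def estimate_cost(total_tokens: int, price_per_1k: float) -> float:
    """Approximate request cost in dollars, given a per-1K-token price."""
    return (total_tokens / 1000) * price_per_1k


# Placeholder values for illustration only
print(f"~${estimate_cost(1500, 0.002):.4f}")
```

Forgetting the division by 1,000 overstates the estimate by three orders of magnitude, which is easy to miss when the numbers are only printed.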

import litellm
from typing import Any, Dict, List, Optional

# One line enables OpenTelemetry logging of LLM responses across all providers
litellm.callbacks = ["otel"]

# Rough per-1K-token price estimates, used only for the approximation below
COST_PER_1K_TOKENS = {
    "gpt-3.5-turbo": 0.002,
    "gpt-4o-mini": 0.0015,
    "gpt-4": 0.03,
    "claude-3-haiku-20240307": 0.00025,
    "claude-3-sonnet-20240229": 0.003,
    "command": 0.015
}

def cost_optimized_completion(
    messages: List[Dict[str, str]],
    fallback_models: Optional[List[str]] = None,
    max_tokens: int = 100
) -> Dict[str, Any]:
    """
    Try models in order of cost efficiency with fallback options
    """
    if fallback_models is None:
        fallback_models = [
            "gpt-3.5-turbo",            # Low-cost OpenAI option
            "claude-3-haiku-20240307",  # Anthropic's fastest/cheapest
            "command",                  # Cohere
            "gpt-4o-mini",              # OpenAI's smaller model
            "gpt-4",                    # Fallback to premium if needed
        ]

    for i, model in enumerate(fallback_models):
        try:
            print(f"Attempting {model} (priority {i+1})...")
            response = litellm.completion(
                model=model,
                messages=messages,
                max_tokens=max_tokens,
                temperature=0.7
            )

            # Calculate approximate cost; unknown models default to $0.002 per 1K tokens
            tokens = response.usage.total_tokens
            estimated_cost = (tokens / 1000) * COST_PER_1K_TOKENS.get(model, 0.002)

            return {
                "success": True,
                "model_used": model,
                "content": response.choices[0].message.content,
                "tokens": tokens,
                "estimated_cost": estimated_cost,
                "attempt_number": i + 1
            }
        except Exception as e:
            print(f"Failed with {model}: {e}")
            if i == len(fallback_models) - 1:  # Last attempt
                return {
                    "success": False,
                    "error": f"All models failed. Last error: {e}",
                    "attempts": len(fallback_models)
                }

    # Only reached when fallback_models is an empty list
    return {"success": False, "error": "No models available"}

# Test cost optimization
result = cost_optimized_completion([
    {"role": "user", "content": "Summarize the key benefits of using Docker containers for development"}
])

if result["success"]:
    print(f"Success with {result['model_used']} on attempt {result['attempt_number']}")
    print(f"Cost: ~${result['estimated_cost']:.4f}, Tokens: {result['tokens']}")
    print(f"Response: {result['content'][:100]}...")
else:
    print(f"Error: {result['error']}")

View Traces

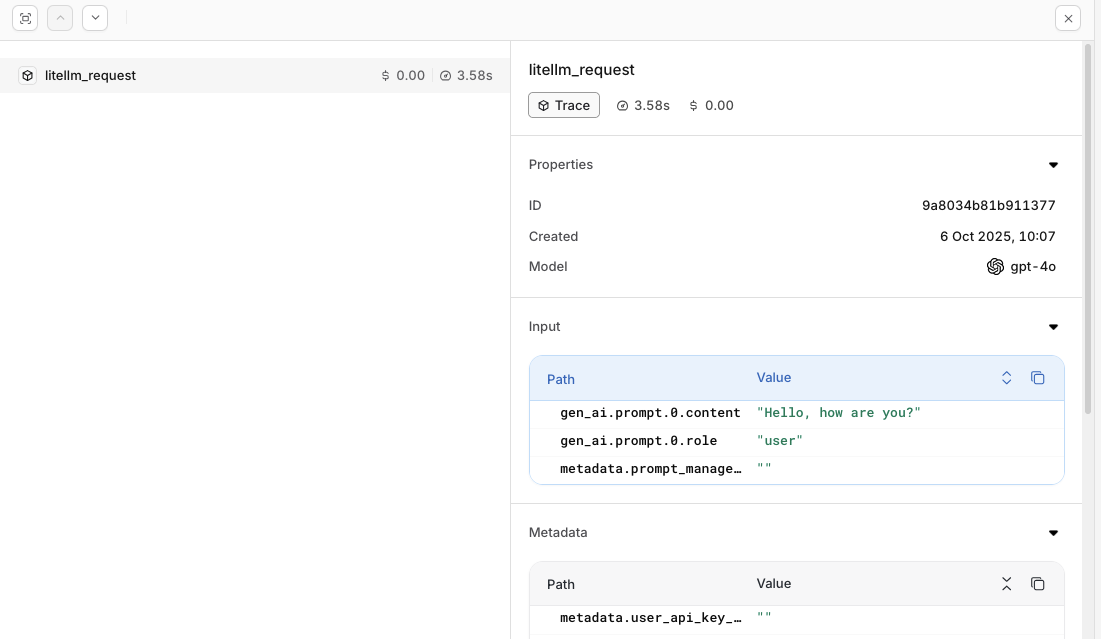

Head to the Traces tab to view LiteLLM traces in the Orq.ai Studio.
