AI Gateway vs Configuration Management

Comparison of the two main ways of integrating orq.ai within your systems.

There are two main ways of integrating orq.ai into your systems: using the service as an AI Gateway, or using it as a Configuration Management system.

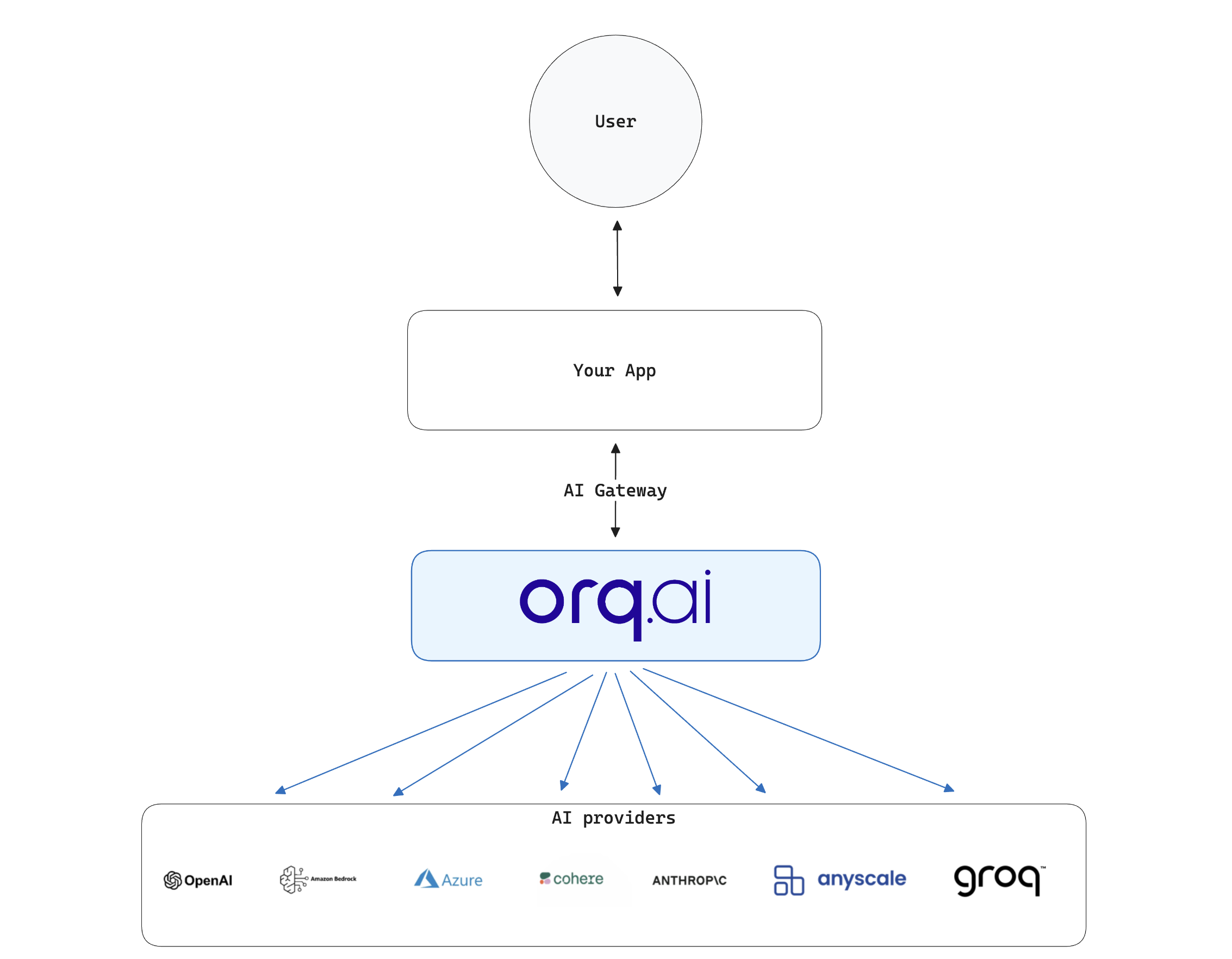

Gateway

The main benefit of using orq.ai as an AI Gateway is its simple integration with existing systems. Your application sends its requests to orq.ai's deployment URLs, and orq.ai forwards them to the LLM backends.

There are multiple advantages to using orq.ai this way:

- It makes managing multiple LLM providers simple, as orq.ai becomes the single interface to all available LLM backends. Your integration with multiple LLMs becomes transparent to your application.

- You benefit from the native retry mechanism and error management we offer. If a call to one LLM provider fails, it is retried seamlessly. Moreover, prolonged failure can result in fallbacks to different providers. To learn more, see Retries & Fallbacks.

- Logs & Monitoring is native to orq.ai. All calls are logged and searchable in the panel, so you keep a trace of all your application's activity with LLMs and can detect potential issues quickly.

One thing to keep in mind:

- This puts orq.ai on the critical path of your applications and systems, so its availability, and a potential fallback path if it is unreachable, are things to consider when designing a fault-tolerant system.
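One way to plan for that fallback path is to wrap the gateway call so that an availability failure falls through to a direct provider call. The following is a minimal sketch only: `GatewayUnavailable`, `via_gateway`, and `direct` are hypothetical names introduced for illustration and are not part of the orq.ai SDK. In practice each callable would wrap whichever client you actually use.

```python
from typing import Callable

# Hypothetical type: takes a prompt and returns the model's text reply.
LLMCall = Callable[[str], str]


class GatewayUnavailable(Exception):
    """Hypothetical error raised by your gateway wrapper when it cannot reach the gateway."""


def complete_with_fallback(prompt: str, via_gateway: LLMCall, direct: LLMCall) -> str:
    """Prefer the gateway; fall back to a direct provider call if it is unreachable."""
    try:
        return via_gateway(prompt)
    except GatewayUnavailable:
        # Only availability errors trigger the fallback; any other error propagates.
        return direct(prompt)
```

Note that calls served by the direct path bypass the gateway, so they would not benefit from its retries, fallbacks, or logging.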

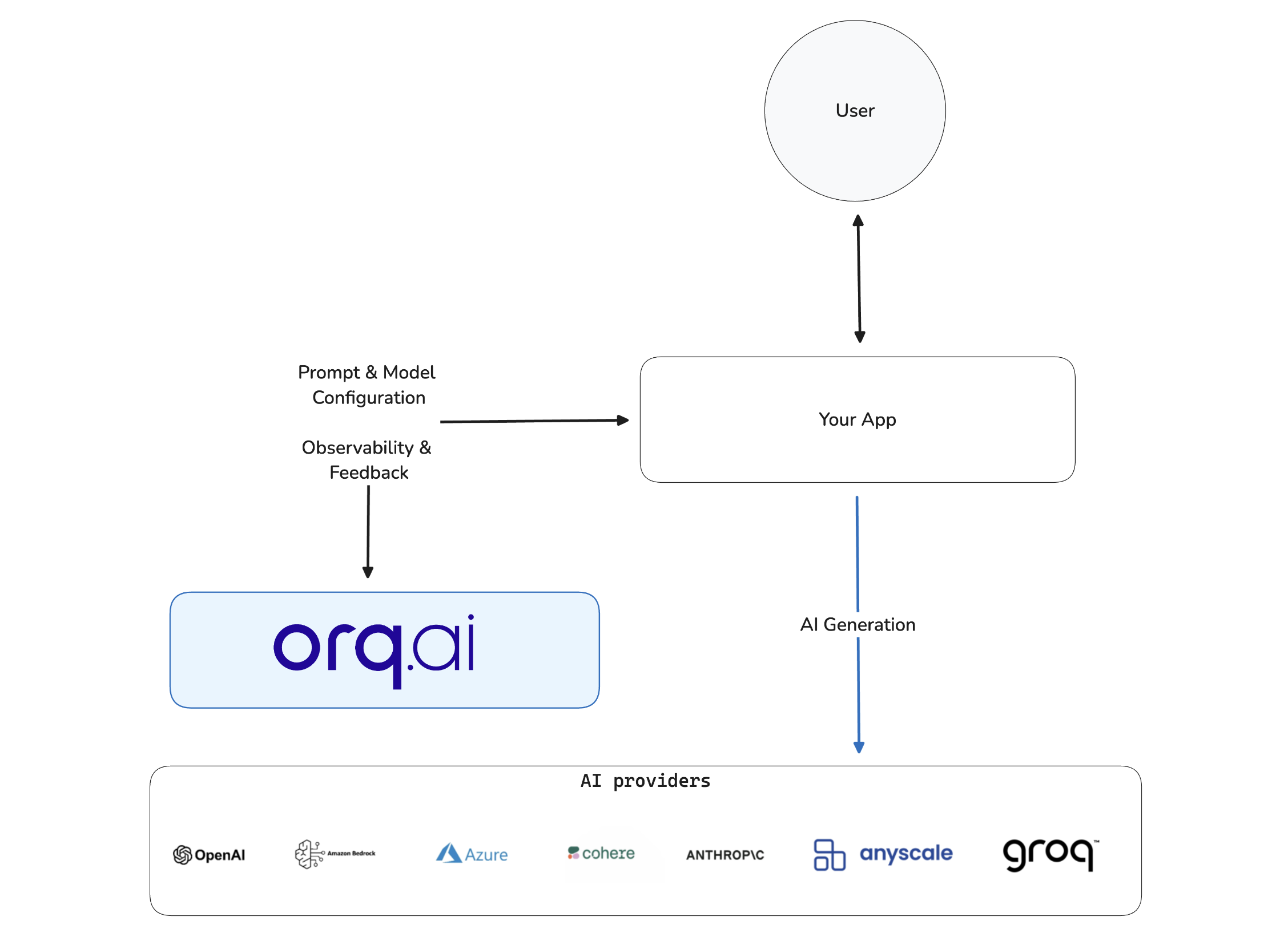

Configuration Management

You can decide to use orq.ai only as configuration management for your various LLM backends.

This has some advantages over using orq.ai as an AI Gateway:

- You manage the calls to LLM models end-to-end. This lets you keep control over the integration and manage its lifecycle, and ensures data stays within your infrastructure before reaching the LLM backends.

- You still benefit from configuration management on the orq.ai side and can fetch the latest configuration from your Deployment at runtime.

- You still benefit from Deployment Routing, which uses dynamic Context Attributes to ensure your users reach the model you intend.
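The flow described above can be sketched as two steps: fetch the configuration for a Deployment at runtime, then call the LLM provider yourself. This is an illustrative sketch under stated assumptions, not the orq.ai SDK: the `DeploymentConfig` fields, `fetch_config`, and `call_provider` are hypothetical and stand in for whatever client code you use to retrieve the configuration and reach the provider.

```python
from dataclasses import dataclass
from typing import Callable, Mapping


@dataclass(frozen=True)
class DeploymentConfig:
    # Hypothetical shape; the real configuration payload may differ.
    provider: str
    model: str
    temperature: float


# Hypothetical callables supplied by your application.
# FetchConfig: (deployment key, context attributes) -> configuration
FetchConfig = Callable[[str, Mapping[str, str]], DeploymentConfig]
# CallProvider: (configuration, prompt) -> model reply, sent directly to the provider
CallProvider = Callable[[DeploymentConfig, str], str]


def answer(
    deployment_key: str,
    context: Mapping[str, str],
    prompt: str,
    fetch_config: FetchConfig,
    call_provider: CallProvider,
) -> str:
    """Resolve the configuration at runtime, then call the provider directly."""
    # Routing happens at fetch time: the context attributes select the configuration.
    config = fetch_config(deployment_key, context)
    # The LLM call itself never passes through the configuration service.
    return call_provider(config, prompt)
```

Because the prompt is only passed to `call_provider`, the request payload goes straight from your infrastructure to the LLM backend, while the configuration service only sees the deployment key and context attributes.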
