CrewAI

Integrate Orq.ai with CrewAI using OpenTelemetry

Getting Started

CrewAI coordinates multiple AI agents that collaborate on complex workflows. Tracing CrewAI with Orq.ai shows how agents interact, how tasks execute, which tools are called, and how the crew performs overall, so you can debug and optimize your multi-agent systems.

Prerequisites

Before you begin, ensure you have:

- An Orq.ai account and API key

- CrewAI installed in your project

- Python 3.10 or later (required by current CrewAI releases)

- An OpenAI API key (or credentials for another LLM provider)

Install Dependencies

```bash
# CrewAI, OpenInference instrumentation, and OpenTelemetry
pip install crewai openinference-instrumentation-crewai
pip install opentelemetry-sdk opentelemetry-exporter-otlp-proto-http

# LLM providers
pip install openai anthropic

# Optional: advanced tools and integrations
pip install crewai-tools
```

Configure Orq.ai

Set up your environment variables to connect to Orq.ai's OpenTelemetry collector:

Unix/Linux/macOS:

```bash
export OTEL_EXPORTER_OTLP_ENDPOINT="https://api.orq.ai/v2/otel"
export OTEL_EXPORTER_OTLP_HEADERS="Authorization=Bearer <ORQ_API_KEY>"
export OTEL_RESOURCE_ATTRIBUTES="service.name=crewai-app,service.version=1.0.0"
export OPENAI_API_KEY="<YOUR_OPENAI_API_KEY>"
```

Windows (PowerShell):

```powershell
$env:OTEL_EXPORTER_OTLP_ENDPOINT = "https://api.orq.ai/v2/otel"
$env:OTEL_EXPORTER_OTLP_HEADERS = "Authorization=Bearer <ORQ_API_KEY>"
$env:OTEL_RESOURCE_ATTRIBUTES = "service.name=crewai-app,service.version=1.0.0"
$env:OPENAI_API_KEY = "<YOUR_OPENAI_API_KEY>"
```

Using .env file:

```text
OTEL_EXPORTER_OTLP_ENDPOINT=https://api.orq.ai/v2/otel
OTEL_EXPORTER_OTLP_HEADERS=Authorization=Bearer <ORQ_API_KEY>
OTEL_RESOURCE_ATTRIBUTES=service.name=crewai-app,service.version=1.0.0
OPENAI_API_KEY=<YOUR_OPENAI_API_KEY>
```

Integration Example

We'll use the OpenInference CrewAI instrumentor together with an OpenTelemetry TracerProvider that exports spans to Orq.ai:

```python
from openinference.instrumentation.crewai import CrewAIInstrumentor
from opentelemetry import trace
from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter
from opentelemetry.sdk import trace as trace_sdk
from opentelemetry.sdk.trace.export import BatchSpanProcessor
from crewai import Agent, Task, Crew

# Initialize OpenTelemetry: batch spans and export them to Orq.ai over OTLP/HTTP
tracer_provider = trace_sdk.TracerProvider()
tracer_provider.add_span_processor(
    BatchSpanProcessor(
        OTLPSpanExporter(
            endpoint="https://api.orq.ai/v2/otel/v1/traces",
            headers={"Authorization": "Bearer <ORQ_API_KEY>"},  # replace with your Orq.ai API key
        )
    )
)
trace.set_tracer_provider(tracer_provider)

# Instrument CrewAI before running any crew
CrewAIInstrumentor().instrument(tracer_provider=tracer_provider)

# From here on, your CrewAI code is traced automatically
researcher = Agent(
    role='Market Research Analyst',
    goal='Gather comprehensive market data and trends',
    backstory='Expert in analyzing market dynamics and consumer behavior',
)

task = Task(
    description='Research the latest trends in AI and machine learning',
    agent=researcher,
    expected_output='Comprehensive report on AI and ML trends with key insights and recommendations',
)

crew = Crew(agents=[researcher], tasks=[task])
result = crew.kickoff()
print(result)
```

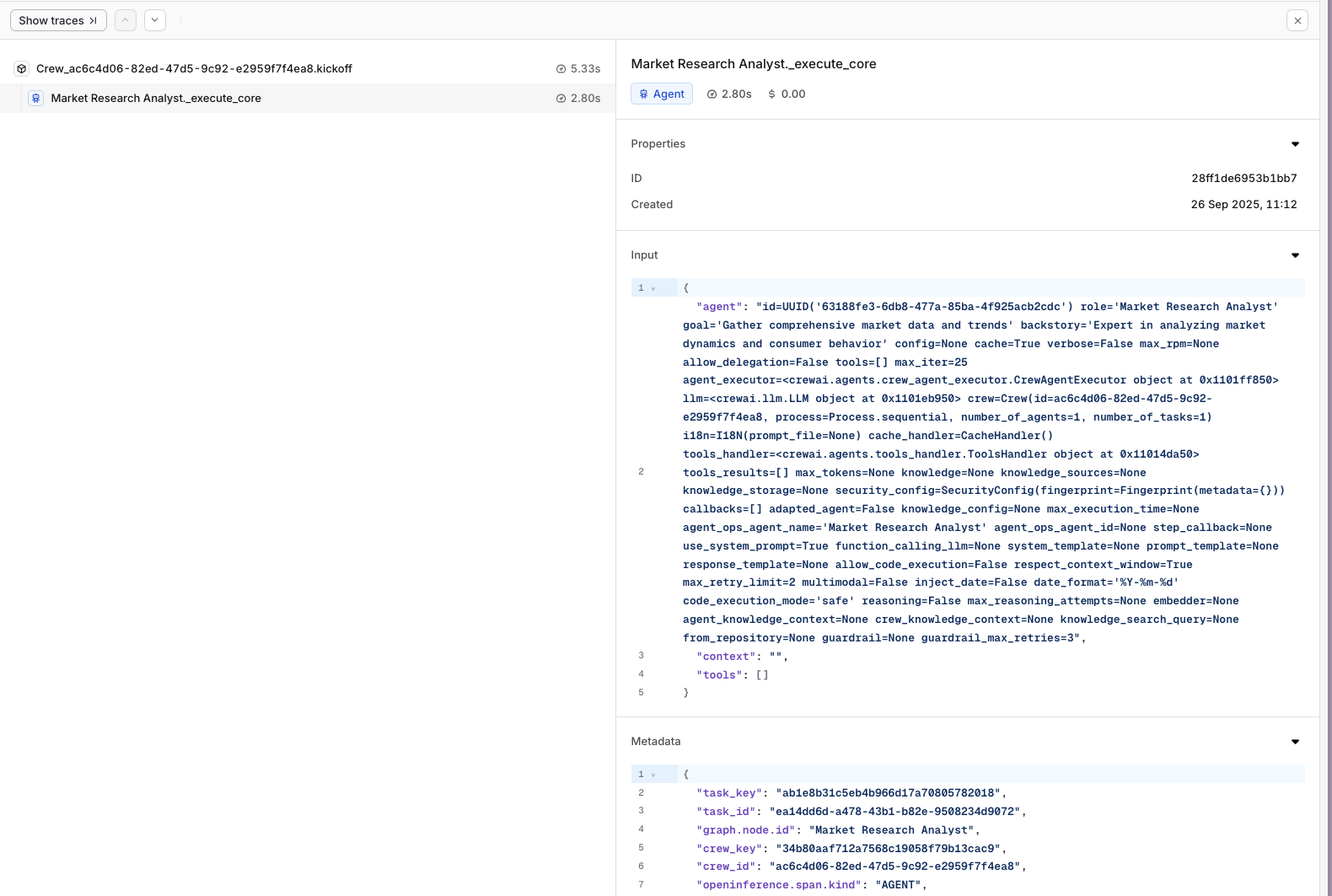
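The environment variables point OTEL_EXPORTER_OTLP_ENDPOINT at the base URL https://api.orq.ai/v2/otel, while the code above passes https://api.orq.ai/v2/otel/v1/traces to the exporter. For OTLP over HTTP, OpenTelemetry appends the signal path v1/traces to the generic endpoint, and the Python OTLPSpanExporter reads OTEL_EXPORTER_OTLP_ENDPOINT and OTEL_EXPORTER_OTLP_HEADERS by itself when constructed without arguments. The stdlib-only sketch below mirrors that derivation so you can see what the exporter ends up using. The helper names are hypothetical, and the sketch ignores the signal-specific OTEL_EXPORTER_OTLP_TRACES_* variables, which the SDK checks first.

```python
from __future__ import annotations

import os
from collections.abc import Mapping
from urllib.parse import unquote


def traces_endpoint(base: str) -> str:
    """Append the OTLP/HTTP traces path to a generic OTLP endpoint."""
    return base.rstrip("/") + "/v1/traces"


def parse_key_value_list(raw: str) -> dict[str, str]:
    """Parse 'k1=v1,k2=v2' strings such as OTEL_EXPORTER_OTLP_HEADERS."""
    pairs: dict[str, str] = {}
    for item in raw.split(","):
        key, sep, value = item.partition("=")  # split on the first '=' only
        if not sep or not key.strip():
            continue  # skip empty or malformed entries
        pairs[key.strip()] = unquote(value.strip())  # values may be percent-encoded
    return pairs


def exporter_kwargs_from_env(env: Mapping[str, str] | None = None) -> dict:
    """Hypothetical helper: endpoint/headers implied by the env-var setup."""
    env = os.environ if env is None else env
    return {
        "endpoint": traces_endpoint(env["OTEL_EXPORTER_OTLP_ENDPOINT"]),
        "headers": parse_key_value_list(env.get("OTEL_EXPORTER_OTLP_HEADERS", "")),
    }


if __name__ == "__main__":
    example_env = {
        "OTEL_EXPORTER_OTLP_ENDPOINT": "https://api.orq.ai/v2/otel",
        "OTEL_EXPORTER_OTLP_HEADERS": "Authorization=Bearer <ORQ_API_KEY>",
    }
    print(exporter_kwargs_from_env(example_env))
    print(parse_key_value_list("service.name=crewai-app,service.version=1.0.0"))
```

In practice you rarely need this helper. With the variables exported, OTLPSpanExporter() picks them up on its own, so you can drop the hardcoded endpoint and API key from the integration example.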

View Traces

Traces from your CrewAI runs appear in the Traces section of the Orq.ai Studio.
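If no traces show up, a quick preflight check can rule out the most common setup gaps. The stdlib-only script below is a hypothetical helper and is not part of Orq.ai or CrewAI. It assumes you rely on the environment variables from Configure Orq.ai and use OpenAI as the LLM provider. Drop OPENAI_API_KEY from the list if you use another provider, and drop the OTEL_* entries if you pass the endpoint and headers in code instead.

```python
from __future__ import annotations

import importlib.util
import os
from collections.abc import Mapping

# Import paths for the packages from the install step (pip names differ)
REQUIRED_MODULES = {
    "crewai": "crewai",
    "openinference.instrumentation.crewai": "openinference-instrumentation-crewai",
    "opentelemetry.sdk": "opentelemetry-sdk",
    "opentelemetry.exporter.otlp.proto.http": "opentelemetry-exporter-otlp-proto-http",
}

# Assumes the env-var setup from "Configure Orq.ai" and OpenAI as the LLM provider
REQUIRED_ENV_VARS = (
    "OTEL_EXPORTER_OTLP_ENDPOINT",
    "OTEL_EXPORTER_OTLP_HEADERS",
    "OPENAI_API_KEY",
)


def module_available(name: str) -> bool:
    """True if `name` is importable; find_spec raises when a parent package is missing."""
    try:
        return importlib.util.find_spec(name) is not None
    except ModuleNotFoundError:
        return False


def preflight(env: Mapping[str, str] | None = None) -> list[str]:
    """Return human-readable problems; an empty list means nothing obvious is missing."""
    env = os.environ if env is None else env
    problems: list[str] = []
    for module, pip_name in REQUIRED_MODULES.items():
        if not module_available(module):
            problems.append(f"missing package: pip install {pip_name}")
    for var in REQUIRED_ENV_VARS:
        if not env.get(var):
            problems.append(f"missing environment variable: {var}")
    return problems


if __name__ == "__main__":
    issues = preflight()
    for issue in issues:
        print("-", issue)
    print("No obvious setup problems found." if not issues else f"{len(issues)} problem(s) found.")
```

An empty result only means the pieces are installed and set. It does not validate the API key itself, so check the key if traces are still missing.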
