Pydantic AI

Integrate Orq.ai with Pydantic AI using OpenTelemetry

Getting Started

Pydantic AI is a Python agent framework designed to make it easier to build production-grade applications with Generative AI. Integrate with Orq.ai to gain complete observability into your AI agent interactions, tool usage, model performance, and conversation flows.

Prerequisites

Before you begin, ensure you have:

- An Orq.ai account and API key

- Pydantic AI installed in your project

- Python 3.9+

- A supported LLM provider (OpenAI, Anthropic, etc.)

Install Dependencies

# Core Pydantic AI packages
pip install pydantic-ai

# Your preferred LLM provider
pip install openai # or anthropic, groq, etc.

# Core OpenTelemetry packages (required for all integration methods)
pip install opentelemetry-sdk opentelemetry-exporter-otlp

Configure Orq.ai

Set up your environment variables to connect to Orq.ai's OpenTelemetry collector:

Unix/Linux/macOS:

export OTEL_EXPORTER_OTLP_ENDPOINT="https://api.orq.ai/v2/otel"
export OTEL_EXPORTER_OTLP_HEADERS="Authorization=Bearer <ORQ_API_KEY>"
export OTEL_RESOURCE_ATTRIBUTES="service.name=pydantic-ai-app,service.version=1.0.0"

Windows (PowerShell):

$env:OTEL_EXPORTER_OTLP_ENDPOINT = "https://api.orq.ai/v2/otel"
$env:OTEL_EXPORTER_OTLP_HEADERS = "Authorization=Bearer <ORQ_API_KEY>"
$env:OTEL_RESOURCE_ATTRIBUTES = "service.name=pydantic-ai-app,service.version=1.0.0"

Using .env file:

OTEL_EXPORTER_OTLP_ENDPOINT=https://api.orq.ai/v2/otel
OTEL_EXPORTER_OTLP_HEADERS=Authorization=Bearer <ORQ_API_KEY>
OTEL_RESOURCE_ATTRIBUTES=service.name=pydantic-ai-app,service.version=1.0.0

Integrations
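Whichever way the variables are set, it can help to confirm they are visible to Python before wiring up a tracing framework. A .env file in particular is not read by Python on its own; something has to load it into the process environment. The sketch below is one minimal, standard-library way to do that, not an official Orq.ai helper. The function names (`parse_env_lines`, `load_env_file`, `missing_otel_vars`) are hypothetical, and treating only the endpoint and headers as required is an assumption. A dedicated library such as python-dotenv will generally handle more edge cases.

```python
import os

# Assumption: the endpoint and headers are the variables the exporter
# cannot work without; OTEL_RESOURCE_ATTRIBUTES is treated as optional.
REQUIRED_VARS = (
    "OTEL_EXPORTER_OTLP_ENDPOINT",
    "OTEL_EXPORTER_OTLP_HEADERS",
)


def parse_env_lines(lines):
    """Parse KEY=VALUE lines, splitting on the first '=' only.

    Splitting once matters here because the headers value itself
    contains an '=' (Authorization=Bearer ...).
    """
    values = {}
    for raw in lines:
        line = raw.strip()
        # Skip blanks, comments, and lines without an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"')
    return values


def load_env_file(path=".env"):
    """Copy variables from a .env file into os.environ.

    Variables that are already set in the environment are left untouched.
    """
    with open(path, encoding="utf-8") as fh:
        for key, value in parse_env_lines(fh).items():
            os.environ.setdefault(key, value)


def missing_otel_vars(env):
    """Return the required OTLP variables that are unset or empty."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


# Demonstration on in-memory lines instead of a real file.
sample = [
    "OTEL_EXPORTER_OTLP_ENDPOINT=https://api.orq.ai/v2/otel",
    "OTEL_EXPORTER_OTLP_HEADERS=Authorization=Bearer <ORQ_API_KEY>",
]
parsed = parse_env_lines(sample)
print(parsed["OTEL_EXPORTER_OTLP_HEADERS"])
print(missing_otel_vars(parsed))
```

In an application, calling `load_env_file()` followed by `missing_otel_vars(os.environ)` at startup, before any tracing is configured, would surface a missing endpoint or API key early rather than as silently absent traces.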

Choose your preferred OpenTelemetry framework for collecting traces. This guide uses Logfire.

pip install logfire

import logfire
from pydantic_ai import Agent

# Configure Logfire
logfire.configure(
    service_name='orq-traces',
    send_to_logfire=False,  # Disable sending to Logfire cloud
)

# Instrument Pydantic AI automatically
logfire.instrument_pydantic_ai()

# Your agent code works normally with automatic tracing
agent = Agent('openai:gpt-4o-mini')
result = agent.run_sync('What is the capital of France?')
print(result)

Examples

Basic Agent Example

from pydantic_ai import Agent

# Set up your chosen integration above, then use Pydantic AI normally
agent = Agent('openai:gpt-4o-mini')

# Simple query
result = agent.run_sync('Explain quantum computing in simple terms')
print(result.data)

Agent with System Prompt

from pydantic_ai import Agent

agent = Agent(
    'openai:gpt-4o-mini',
    system_prompt='You are a helpful assistant that always responds in a professional tone.',
)

result = agent.run_sync('What are the benefits of renewable energy?')
print(result.data)

Agent with Tools

from pydantic_ai import Agent, RunContext

agent = Agent('openai:gpt-4o-mini')

@agent.tool
async def get_weather(ctx: RunContext[None], location: str) -> str:
    """Get current weather for a location."""
    # Simulate weather API call
    return f"The weather in {location} is sunny and 75°F"

@agent.tool
async def search_web(ctx: RunContext[None], query: str) -> str:
    """Search the web for information."""
    # Simulate web search
    return f"Search results for '{query}': Found relevant information about the topic."

# Use the agent with tools
result = agent.run_sync('What is the weather like in San Francisco and find recent news about climate change?')
print(result.data)

Structured Response

from pydantic_ai import Agent

from pydantic import BaseModel

from typing import List

class BookRecommendation(BaseModel):

title: str

author: str

genre: str

rating: float

summary: str

class BookList(BaseModel):

recommendations: List[BookRecommendation]

total_count: int

agent = Agent('openai:gpt-4o-mini', result_type=BookList)

result = agent.run_sync('Recommend 3 science fiction books published in the last 5 years')

print(f"Found {result.data.total_count} recommendations:")

for book in result.data.recommendations:

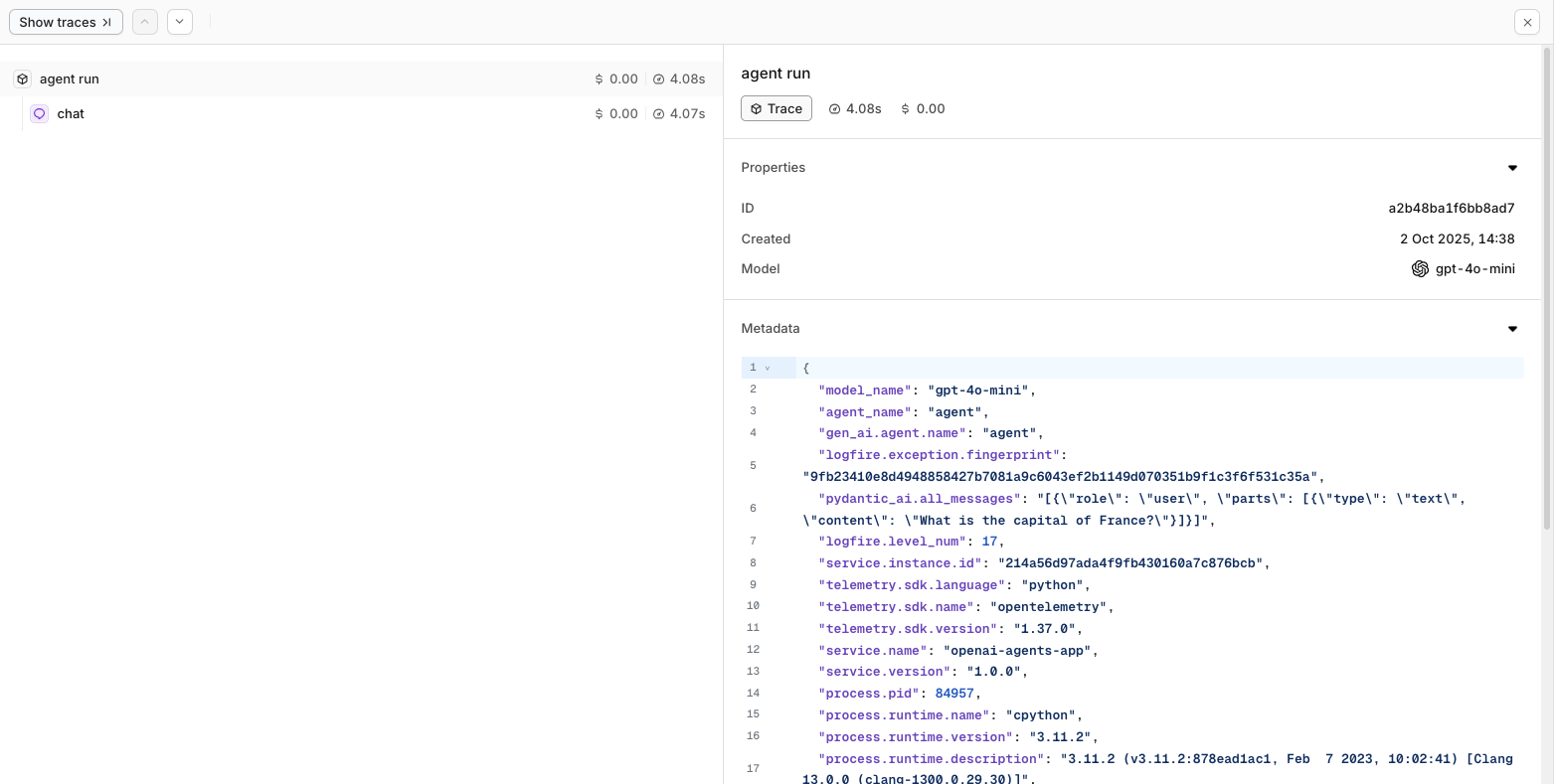

print(f"- {book.title} by {book.author} ({book.rating}/5)")View Traces

View your traces in the Traces tab of the Orq.ai Studio.
